Need for Continuous Security and Compliance Automation

The significant increase in malicious attacks in recent years has forced organizations to shift from reactive, diagnostic approaches to proactive, preventive ones. Teams that integrate security practices throughout their entire software supply chain deliver software quickly, safely, and reliably, which means they can achieve continuous security and compliance.

But this is a challenging task for many organizations, because they still rely on manual, outdated compliance and security practices that impede software delivery performance. As a result, they discover compliance breaches late in the software delivery pipeline, where mistakes are costly and difficult to correct.

Advent of DevSecOps

These were the issues that led to the birth of the ‘DevSecOps movement’. At the heart of its philosophy, DevSecOps emphasizes the need to integrate and automate security best practices within DevOps. But an often overshadowed aspect of security in DevSecOps is compliance management.

On its own, ‘security’ is just a set of guidelines, established either verbally or in writing. It is ‘compliance’ that actually enforces security best practices on DevOps teams. While DevOps improved the velocity of software deployment, DevSecOps is about improving both deployment velocity and deployment security. Before going further, I urge you to read our blog post on how and why 100% compliance can be achieved only by shifting security to the left.

DevSecOps Implementation with OpsMx

At OpsMx, we recommend integrating security into your continuous delivery pipeline to (a) improve software delivery, (b) harden your security posture, (c) ensure compliance, and (d) boost operational performance, by leveraging the following:

- Security testing

- Integrating information security reviews into every part of the software delivery lifecycle

- Building from pre-approved code

Imagine, for a moment, a continuous compliance and security toolchain. Within that toolchain, OpsMx helps integrate security and compliance into the continuous delivery pipeline as Compliance-as-Code.

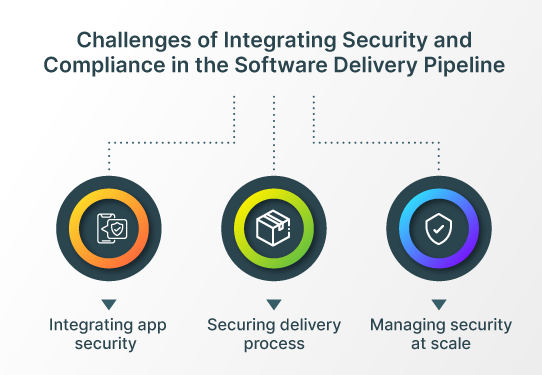

Challenges of Integrating Security and Compliance in the Software Delivery Pipeline

When trying to integrate security and policy governance into the software delivery pipeline, companies often face common challenges. Some of the challenges OpsMx customers face are:

- Integrating and automating application security into CI/CD

- Securing your software delivery process

- Managing security at scale

- Improving cohesion between Dev, Ops, and Sec

Integrating app security

Application security is not restricted to ensuring the deployed code is secure. It also includes adhering to org-specific and industry-specific compliance requirements and policies during development. To achieve this, teams must ensure that all artifacts used in software delivery are free of known vulnerabilities and certified accordingly by security scans. Such real-time assessment enables faster triage of any issues and faster approvals, without impacting deployment velocity.
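To make the idea of certified artifacts concrete, here is a minimal Python sketch of a certification check, assuming each artifact carries the severities its security scan reported. The `Artifact` type, the severity names, and the `medium` threshold are all hypothetical and are not part of any OpsMx API; a real pipeline would read findings from its scanner's report.

```python
from dataclasses import dataclass, field

# Hypothetical severity ordering used to compare scan findings against a policy threshold.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass
class Artifact:
    name: str
    version: str
    findings: list = field(default_factory=list)  # severities reported by the scanner
    scanned: bool = False                         # was a security scan recorded at all?


def certify(artifacts, max_allowed="medium"):
    """Map each artifact to the reasons it fails certification (empty list means certified)."""
    limit = SEVERITY_RANK[max_allowed]
    report = {}
    for art in artifacts:
        reasons = []
        if not art.scanned:
            reasons.append("no security scan on record")
        blocking = [s for s in art.findings if SEVERITY_RANK[s] > limit]
        if blocking:
            reasons.append(f"{len(blocking)} finding(s) above '{max_allowed}'")
        report[f"{art.name}:{art.version}"] = reasons
    return report


report = certify([
    Artifact("payments-api", "1.4.2", findings=["low", "medium"], scanned=True),
    Artifact("auth-service", "2.0.0", findings=["critical", "high"], scanned=True),
    Artifact("web-ui", "0.9.1"),
])
approved = [key for key, reasons in report.items() if not reasons]
print(approved)  # ['payments-api:1.4.2']
```

Treating a missing scan as a failure, rather than as a pass, is the design choice that matters here: an artifact with no evidence should not be approved by default.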

Securing delivery process

The next challenge is to ensure that the delivery workflow itself is secure and compliant. Teams must focus on security all the way from code check-in to deployment, whether the target is multi-cloud, on-prem, or hybrid. Other software supply chain security challenges include automating policies that enforce separation of duties in application administration and deployment approvals, and controlling access to the cluster through the secrets an application uses.

Managing security at scale

Vulnerabilities are often reported in code that is already in production. The biggest challenge is identifying such vulnerabilities and locating where in production the affected code runs. And the challenge is not only locating the issues, but also fixing them quickly, before threat actors can do much harm.
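Locating where a vulnerable component runs is essentially a lookup against an inventory of what was deployed where. The sketch below assumes such an inventory already exists (for example, exported from a delivery bill of materials) as a list of deployments with the package versions each one ships. The `Deployment` structure, the package name `libparse`, and its affected versions are invented for illustration and do not reflect the actual DBOM format.

```python
from dataclasses import dataclass, field


@dataclass
class Deployment:
    service: str
    cluster: str
    components: dict = field(default_factory=dict)  # package name -> version shipped


def find_exposure(deployments, package, affected_versions):
    """Return (cluster, service, version) for every deployment shipping an affected version."""
    hits = [
        (d.cluster, d.service, d.components[package])
        for d in deployments
        if d.components.get(package) in affected_versions
    ]
    return sorted(hits)


# Hypothetical production inventory and advisory ("libparse" is a made-up package).
inventory = [
    Deployment("checkout", "prod-eu", {"libparse": "1.2.0", "yaml": "6.0"}),
    Deployment("search", "prod-us", {"libparse": "1.3.1"}),
    Deployment("ledger", "prod-us", {"libparse": "1.2.0"}),
    Deployment("notifier", "prod-eu", {"yaml": "6.0"}),
]
exposed = find_exposure(inventory, "libparse", {"1.2.0", "1.2.1"})
for cluster, service, version in exposed:
    print(f"{cluster}/{service} ships libparse {version}")
```

The lookup is only as good as the inventory, which is why recording exactly what was deployed, and where, at delivery time matters so much when an advisory lands.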

Securing your software supply chain with OpsMx SSD

OpsMx SSD (Secure Software Delivery) helps secure your software supply chain through modules such as Deployment Firewall, DBOM (Delivery Bill of Materials), Exception Handling, Policy Enforcement & Compliance Management, and an Audit and Accountability system, among others.

All of these modules ensure that:

- Bad (or vulnerable) code is stopped before it is deployed to production

- Secrets and passwords exposed in the code repo are highlighted and prevented from being exposed in the CI/CD pipeline

- Exceptions are accommodated and time-boxed to be addressed at a later time

- Periodic audits are conducted and an accountability system is in place to showcase the security posture of the application

- Org-specific and industry-specific policies are defined and compliance requirements are met, and more…
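Time-boxed exceptions can be modeled as waivers that carry an expiry date. In this hedged sketch, a policy violation is waived only while a matching, unexpired exception exists; once the date passes, the same violation blocks the release again. The `PolicyException` type, the policy names, and the dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class PolicyException:
    policy: str    # which policy is being waived
    artifact: str  # which artifact the waiver applies to
    expires: date  # last day the waiver is honored
    reason: str


def gate(violations, exceptions, today):
    """Split (policy, artifact) violations into blocking ones and ones waived by a live exception."""
    blocking, waived = [], []
    for policy, artifact in violations:
        covered = any(
            e.policy == policy and e.artifact == artifact and today <= e.expires
            for e in exceptions
        )
        (waived if covered else blocking).append((policy, artifact))
    return blocking, waived


exceptions = [
    PolicyException("no-critical-cves", "payments-api:1.4.2",
                    expires=date(2024, 7, 31), reason="vendor patch pending"),
]
violations = [
    ("no-critical-cves", "payments-api:1.4.2"),
    ("signed-images-only", "web-ui:0.9.1"),
]

blocking, waived = gate(violations, exceptions, today=date(2024, 7, 1))
print("blocking:", blocking)
print("waived:", waived)
```

Because waivers expire on their own, nobody has to remember to revoke them, which keeps exceptions from quietly becoming permanent.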

A few of these points can be accomplished manually, but only at the cost of deployment velocity, security compliance, or both.

Below are a few examples of how OpsMx has helped several companies achieve their DevSecOps goals.

Improving Cohesion between Dev, Ops, Sec

The friction between developers and operations engineers is well documented. That friction has not been completely eliminated, and adding ‘security’ to DevOps is, logically and practically, bound to increase friction between security professionals and DevOps engineers. Getting people on board with the newest philosophy is an ongoing challenge for most companies.

To make matters worse, in many companies ‘security’ is just an afterthought. Because security professionals come in at the very end, it is too late to lay down ground rules for security, and reworking from scratch is painful.

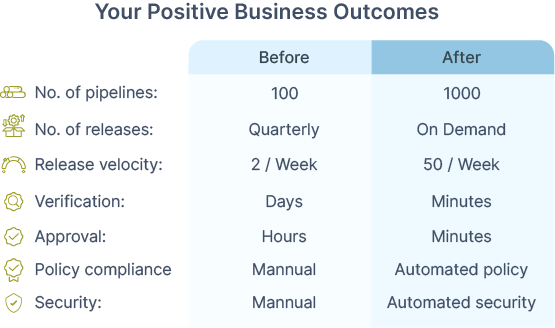

Positive business outcomes experienced by customers with OpsMx SSD

A cohort of companies has experienced major benefits, including the ability to manage substantial increases in pipelines, which has let them scale their systems and increase the volume and velocity of releases. By reducing human toil and eliminating manual errors through intelligent automation, these companies have significantly reduced verification and approval times. With compliance and security automated, we can help increase their software delivery performance further, which in turn results in faster discovery, detection, and remediation of security violations and vulnerabilities.

Success Cases

Interswitch, a leading digital commerce player in Africa, was struggling to innovate frequently enough to provide sustainable payment solutions. Although Interswitch was instrumental in transforming Africa’s payment landscape, it faced a number of problems, including:

1. Script-based deployment was time-consuming

Interswitch migrated from monolithic software to microservices and containerized applications. Initially, their teams wrote numerous scripts using Jenkins and SSH plugins to automate deployments into multiple environments. As their pace of innovation increased, the non-standard deployment process needed to maintain these scripts made software deployments slow and complex.

2. Complying with Financial Regulations

Operating in the financial industry, Interswitch must strictly comply with many regulations and standards. It was particularly challenging for the IT team to perform policy checks manually and adhere to the SDLC regulations set by the compliance team. Moreover, auditing and investigating non-compliance issues was a cumbersome task for their compliance managers.

3. Lack of Secured Delivery

Interswitch stores and processes personal information in their own data centers, so they needed ways to reduce the risk of loss, unauthorized access, and leakage of personal information. Their IT team uses firewalls and data encryption to control access to those data centers, and for security reasons their production Kubernetes clusters are configured in on-prem data centers.

However, there was no proper mechanism to deliver applications automatically into these secured clusters. That’s when they realized they needed a streamlined, modern continuous delivery process that eliminated excessive dependence on tribal knowledge, so they could meet compliance requirements and handle their scale.

How Interswitch improved both Deployment Velocity and Security Posture with OpsMx SSD

Interswitch adopted OpsMx SSD, a scalable, modern continuous delivery platform, to deploy apps into Kubernetes quickly and safely. With OpsMx, Interswitch has automated its end-to-end software delivery pipeline, seamlessly integrating its DevOps toolchain: a code management system (Bitbucket), a CI system (Jenkins), a code quality and vulnerability scanning tool (SonarQube), and an artifact repository (JFrog).

Now, Interswitch’s developers and DevOps engineers use OpsMx SSD to securely deploy applications into cloud and on-prem Kubernetes clusters behind the firewall. As a result, Interswitch delivers applications at scale, with nearly 100 developers, 10 DevOps engineers, and project managers using OpsMx SSD to orchestrate the deployment and delivery of their payment applications. Most importantly, their DevOps team now automates canary analysis and easily estimates the risk of new releases.

OpsMx Autopilot automatically gathers metrics from canary and baseline pods, applies AI/ML to perform a risk assessment, and enables automated decisions to roll out or roll back based on the resulting risk score. Autopilot also helps the DevSecOps team address regulatory and compliance concerns by letting them define, automate, and enforce policies within their delivery pipeline.
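Autopilot's actual scoring model is not described here, so the following is only a simplified stand-in that shows the general shape of canary analysis: compare each canary metric with the baseline, turn the comparisons into a score, and map the score to a roll-out or rollback decision. The 10% tolerance, the 90-point threshold, the metric names, and the sample values are all assumptions; production tools typically apply statistical tests rather than a plain comparison of means.

```python
from statistics import mean


def canary_score(baseline, canary, tolerance=0.10, lower_is_worse=frozenset()):
    """Score a canary against its baseline (simplified, hypothetical model).

    baseline/canary map metric name -> samples. A metric passes when the canary's
    mean is at most `tolerance` (a fraction) worse than the baseline's mean.
    Returns (score from 0 to 100, per-metric pass/fail).
    """
    verdicts = {}
    for metric, base_samples in baseline.items():
        base, cand = mean(base_samples), mean(canary[metric])
        if base == 0:
            change = 0.0 if cand == 0 else float("inf")
        else:
            change = (cand - base) / base
        if metric in lower_is_worse:  # e.g. throughput: a drop is the regression
            change = -change
        verdicts[metric] = change <= tolerance
    score = 100 * sum(verdicts.values()) / len(verdicts)
    return score, verdicts


def decide(score, promote_at=90):
    """Promote only if the score clears the threshold; otherwise roll back."""
    return "promote" if score >= promote_at else "rollback"


baseline = {"latency_ms": [100, 110, 105], "error_rate": [0.01, 0.01, 0.01], "rps": [500, 520, 510]}
canary = {"latency_ms": [104, 108, 109], "error_rate": [0.03, 0.02, 0.025], "rps": [505, 515, 500]}

score, verdicts = canary_score(baseline, canary, lower_is_worse={"rps"})
print(round(score, 1), verdicts, decide(score))
```

Here latency and throughput stay within tolerance, but the error rate more than doubles, so two of three metrics pass and the release is rolled back.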

All software passes through these automated policy gates, ensuring security and compliance before reaching production. As a result, their time from code check-in to production has dropped from days to hours, because everything is streamlined under one umbrella, cutting lead time by at least 70%.

With OpsMx, Interswitch can identify deployment failures during software delivery with end-to-end visibility. Progress is tracked at all times from Dev through UAT to production, and their compliance stakeholders get an audit report for any given time period, giving them insight into policy violations, pipeline failures, and bad deployments.

Success Case 2: Symphony Communication Services

Symphony is a major player in the financial services industry, acting as the collaboration hub for large (and small) firms across banking, brokerage, and wealth management. Because their collaboration platform is highly regulated and serves millions of customers, meeting the rigorous standards set by governing authorities means maintaining the utmost level of security and regulatory compliance. Symphony needed to reduce development costs and deploy faster, so they turned to OpsMx for help.

In addition, they wanted to make an architectural change to their codebase to enable a multi-tenant system that would allocate dedicated resources to each client for maximum performance. One particular pain point was their existing CI value stream, which created a bottleneck to achieving that performance.

Their distributed SaaS platform, with an ever-growing number of pipelines and software delivery environments, was increasing the burden on staff and driving infrastructure costs sky-high. As a result, maintaining compliance standards at this increased scale of operation was becoming quite difficult.

Most importantly, any security vulnerability introduced during delivery could cripple the business. To avoid this, they looked for a modern continuous delivery solution for both infrastructure and software that could retrofit into their existing platform. And of course, they wanted to accomplish all of this within 90 days.

OpsMx addressed a number of challenges that Symphony was facing:

- Lack of an agile infrastructure

- Lack of a hardened Continuous Delivery platform with security features

- The requirement to meet business demand

1. Lack of an agile infrastructure

Previously, Symphony applied changes to their production system using manual steps and complex Terraform scripts. Idle systems were hard to manage and were inflating costs, and the process of deploying new infrastructure to onboard new customers was becoming too long. They needed a significantly shorter, simpler deployment process.

2. The requirement to meet business demand

To attain higher deployment velocity, Symphony needed to secure their software delivery. They needed a platform that could add verification checks throughout the delivery process and provide useful insights into risks, informing approvals while maintaining velocity.

3. Lack of Secured Delivery

The process of moving changes from development to test and finally to production was complex. Tenant infrastructure deployment failed to keep pace with their needs, and clients had to wait for their product. With expanding pipelines, their SREs were burdened with troubleshooting, which also increased triage time. They needed a platform that could guide SREs to the problem area instead of having them spend time hunting for the source of the problem.

Symphony automated the onboarding of new tenants by automating their entire CI/CD process with the OpsMx ISD platform. They increased deployments from 2-3 updates per week to 50 per day, which allowed them to reduce the time to onboard new clients by 98%. It now takes barely 10 minutes to get the infrastructure and platform ready for a client. As Symphony continues to increase the number of services provided to customers, these automated pipelines with granular access control ensure safety and security.

OpsMx also addressed cost optimization through its integration with Terraform. Thanks to this deep integration, they now combine database, infrastructure, and software updates in a single automated pipeline. This slashed their infrastructure costs because they can automatically provision and de-provision their dev, test, and staging environments.

This was also a big enabler for shipping faster, since developers no longer needed to wait for a DevOps engineer to build test environments. Their development, test, and SRE teams also use the OpsMx security framework, along with Autopilot, the delivery intelligence layer, which relieves the burden the SREs previously had to manage.

OpsMx also allowed them to define and enforce policies throughout their pipeline with AI algorithms, managing compliance checks at every stage. Erroneous code or configurations are red-flagged and the SREs are notified, which frees them from the daily toil of troubleshooting. As a result, teams have seen a significant improvement in delivery velocity without compromising security.

Automating Policy enforcement through Compliance-as-Code

Staying compliant in a cloud-first world is a ubiquitous challenge, not just for startups but also for enterprises. Many organizations fail to achieve continuous security and compliance and instead fall back on ad hoc, time-consuming, and error-prone processes. Traditionally, they relied on a quick checklist of scripts to achieve compliance in software delivery.

This, of course, exposes the organization to business risk. Most organizations agree that they need to improve their speed of delivery, which is why it is important to eliminate manual steps and hard-coded checks and to provide better visibility into the compliance checks in the delivery process.

This is where Compliance-as-Code comes into the picture. Compliance-as-Code refers to the practices that allow DevSecOps teams to embed the three core activities at the heart of compliance: prevent, detect, and remediate. It automates governance and security policies and reliably enforces them across the delivery pipeline.

Examples include enforcing Sarbanes-Oxley (SOX) compliance in the software delivery process, or enforcing specific role-based access control over the approvals and checks required before deploying to production. SOX approvals, for example, could be direct or indirect, in the sense that a developer checking code into Git triggers a pipeline that must be approved by another person (typically a senior engineer or manager) before the code is delivered.
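The SOX separation-of-duties rule can be expressed as a small check. This is a minimal sketch, assuming the pipeline knows who authored the triggering commits and who approved the run: a release proceeds only when someone in an approver role, who is not one of the authors, has signed off. The role names and the `Approval` type are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical roles allowed to approve production releases.
APPROVER_ROLES = {"senior-engineer", "engineering-manager"}


@dataclass
class Approval:
    user: str
    role: str


def sox_check(committers, approvals):
    """Allow a release only if someone in an approver role, who did not author it, approved."""
    independent = [
        a for a in approvals
        if a.role in APPROVER_ROLES and a.user not in committers
    ]
    if independent:
        return True, f"approved by {independent[0].user}"
    return False, "needs approval from an authorized reviewer who did not author the change"


committers = {"alice"}  # authors of the commits that triggered this pipeline run
print(sox_check(committers, [Approval("alice", "engineering-manager")]))  # self-approval
print(sox_check(committers, [Approval("carol", "developer")]))            # wrong role
print(sox_check(committers, [Approval("bob", "senior-engineer")]))        # independent approver
```

Note that a manager approving their own commit is rejected even though the role is valid: the rule is about independence, not seniority alone.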

Another set of approvals can come from quality and operations teams, based on the data from the testing and security checks required before a delivery is approved for production. These approvals need visibility into which checks were performed and what their results were, and they can be granted through Slack and ChatOps.

For security, the checks to surface include static code analysis, binary scans, and dynamic code analysis. Results from performance and functional tests can also help when deciding whether to deploy to production. To make that decision, approvers need the delivery platform to verify this data and present the results of these tests.
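One way to give approvers that visibility is to put every check and its result into the approval request itself. This hypothetical helper only builds the message text that a ChatOps integration could post to a channel; it does not call Slack, and the check names and details are illustrative.

```python
def approval_summary(release, checks):
    """Render a plain-text approval request listing every check and its result.

    `checks` maps check name -> (passed, detail).
    """
    lines = [f"Approval requested: {release} -> production"]
    for name, (passed, detail) in checks.items():
        lines.append(f"  [{'PASS' if passed else 'FAIL'}] {name}: {detail}")
    ready = all(passed for passed, _ in checks.values())
    lines.append("Recommendation: " + ("approve" if ready else "hold"))
    return "\n".join(lines)


message = approval_summary("payments-api:1.4.2", {
    "static code analysis": (True, "0 blocker issues"),
    "binary scan": (False, "1 critical CVE in base image"),
    "dynamic analysis": (True, "no high-risk findings"),
    "performance tests": (True, "p95 latency within budget"),
})
print(message)
```

The approver still makes the call; the message just ensures the decision is made with the evidence in view rather than from memory.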

The ability to fetch this data and evaluate it against defined policies empowers organizations to deliver faster. These policies are defined by people in a central organization, who may be different from the people actually configuring the delivery pipeline. Therefore, as a practice, organizations should not keep these policies as text in a document; instead, they should enforce them as rules that can be applied as code.
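Here is a minimal sketch of what "policies as rules rather than documents" can look like: a central team publishes policies as data, and the pipeline evaluates them against the evidence gathered for a run. The JSON schema, field names, and operators are assumptions made for illustration, not the format of any particular product; dedicated policy engines offer far richer languages for this.

```python
import json
import operator

# Operators a policy author may use; a small, fixed set keeps policies auditable.
OPS = {"==": operator.eq, "<=": operator.le, ">=": operator.ge, "in": lambda a, b: a in b}

# Hypothetical policy document, as a central security team might publish it.
POLICIES = json.loads("""
[
  {"name": "static-analysis-ran", "field": "sast.completed", "op": "==", "value": true},
  {"name": "no-critical-cves",    "field": "scan.critical",  "op": "<=", "value": 0},
  {"name": "min-test-coverage",   "field": "tests.coverage", "op": ">=", "value": 80},
  {"name": "approved-registry",   "field": "image.registry", "op": "in", "value": ["registry.internal"]}
]
""")


def lookup(ctx, dotted):
    """Resolve a dotted path such as 'scan.critical' in nested run data."""
    for part in dotted.split("."):
        ctx = ctx[part]
    return ctx


def evaluate(policies, ctx):
    """Return policy name -> passed? for one pipeline run."""
    results = {}
    for p in policies:
        try:
            results[p["name"]] = OPS[p["op"]](lookup(ctx, p["field"]), p["value"])
        except KeyError:
            # A missing field in the run data (or an unknown operator) counts as a failure.
            results[p["name"]] = False
    return results


run = {
    "sast": {"completed": True},
    "scan": {"critical": 1},
    "tests": {"coverage": 84.5},
    "image": {"registry": "registry.internal"},
}
results = evaluate(POLICIES, run)
print([name for name, ok in results.items() if not ok])  # ['no-critical-cves']
```

Treating missing evidence as a failure keeps the policy fail-closed: if a scan never ran, the run cannot silently pass.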

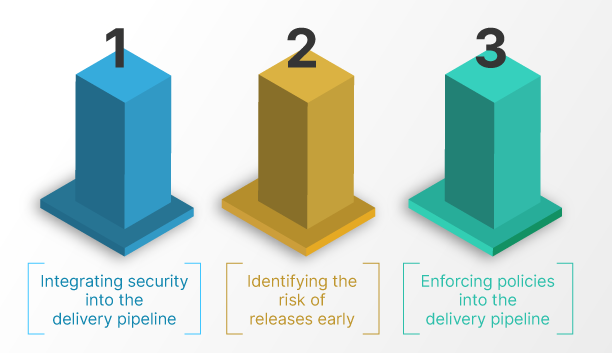

Enforcing Compliance into the Delivery workflow

While enforcing compliance, certain steps take place in sequence within the delivery workflow: first a build step, then static code analysis, then integration, then dynamic code analysis, and finally a binary scan. Some steps, however, need not run strictly in sequence. Conceptually, three steps are key to achieving security and compliance automation:

a. Provide guardrails in the delivery pipeline, ensuring the necessary checks are included in the pipeline.

b. Provide a gating mechanism. If these checks fail, we should stop the delivery from going to production, and have those issues resolved before they get deployed.

c. Define and automate specific policies that are set up within these pipelines.
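The guardrail and gating steps (a and b) can be sketched together. The guardrail inspects the pipeline definition before it runs and rejects it if a required stage is missing or out of order; the gate then stops the run at the first failed check, so nothing reaches production. The stage names are hypothetical and follow the sequence described in this section; extra stages such as unit tests are allowed.

```python
# Hypothetical stage names, in the order this section describes.
REQUIRED_ORDER = ["build", "static-analysis", "integration", "dynamic-analysis", "binary-scan"]


def guardrail(stages):
    """(a) Guardrail: the pipeline must contain every required stage, in the required order."""
    missing = [s for s in REQUIRED_ORDER if s not in stages]
    if missing:
        return False, f"missing stages: {missing}"
    positions = [stages.index(s) for s in REQUIRED_ORDER]
    if positions != sorted(positions):
        return False, "required stages are out of order"
    return True, "ok"


def run_with_gate(stages, outcomes):
    """(b) Gate: run stages in order and stop at the first failure, so nothing reaches prod."""
    completed = []
    for stage in stages:
        if not outcomes.get(stage, False):
            return f"blocked at {stage}", completed
        completed.append(stage)
    return "promoted to production", completed


pipeline = ["build", "unit-tests", "static-analysis", "integration",
            "dynamic-analysis", "binary-scan"]
print(guardrail(pipeline))
print(guardrail(["build", "integration", "binary-scan"]))

outcomes = {stage: True for stage in pipeline}
outcomes["dynamic-analysis"] = False  # simulate a failing dynamic analysis run
print(run_with_gate(pipeline, outcomes))
```

Step (c) then plugs into the gate: each stage's outcome can itself be the result of evaluating the defined policies against that stage's evidence.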
