Today, almost every company we talk to has adopted a microservice architecture and needs to deploy its apps to Kubernetes clusters quickly. For DevOps teams that want to deploy their code using the GitOps methodology, the open-source Argo project has become the first-choice CD tool. In this blog, we will see how to perform canary rollouts and deploy Kubernetes apps safely using Argo Rollouts and OpsMx Autopilot.

What is Argo Rollouts and how does it work?

Argo Rollouts is a Kubernetes controller and a set of CRDs (custom resource definitions) that provide progressive delivery strategies such as blue-green and canary deployments.

Argo Rollouts is a part of the Argo open source project.

The Rollout CRD defined by Argo Rollouts is a custom workload resource that acts as a drop-in replacement for the Kubernetes Deployment workload resource.

We will look at how Argo Rollouts performs canary analysis later in this post, but in a nutshell:

- It integrates with ingress controllers and service meshes for advanced traffic routing to gradually shift traffic to the new version during an update.

- It can query and interpret metrics from various providers to verify key KPIs and drive automated promotion or rollback during canary or blue/green deployments.
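To make the metric-driven verification in the second point concrete, here is a minimal sketch of an AnalysisTemplate that uses the Prometheus provider. It is not part of the setup described in this post: the template name, the `service-name` argument, the Prometheus address, the `http_requests_total` metric and its labels, and the 95% success-rate threshold are all assumptions chosen for illustration.

```yaml
# Sketch only: a Prometheus-based AnalysisTemplate.
# Name, argument, address, metric/labels, and threshold are assumptions.
apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: success-rate
spec:
  args:
    - name: service-name
  metrics:
    - name: success-rate
      interval: 1m                          # take one measurement per minute
      count: 5                              # five measurements in total
      failureLimit: 1                       # tolerate at most one failed measurement
      successCondition: result[0] >= 0.95   # Prometheus returns a vector; check its first value
      provider:
        prometheus:
          address: http://prometheus.monitoring.svc:9090   # assumed in-cluster address
          query: |
            sum(rate(http_requests_total{service="{{args.service-name}}",code!~"5.."}[5m]))
            /
            sum(rate(http_requests_total{service="{{args.service-name}}"}[5m]))
```

A Rollout references a template like this from an analysis or experiment step; if more measurements fail than `failureLimit` allows, the analysis run fails and the rollout is aborted.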

Read more about Argo Rollouts here.

Challenges with Argo Rollouts

Argo Rollouts can query metrics from monitoring tools such as Prometheus, Datadog, New Relic, and Wavefront. But there are specific challenges to performing canary analysis in production environments:

- For metric analysis and verification, Argo Rollouts natively supports an open-source service called Kayenta. However, Argo Rollouts provides no out-of-the-box integration for detecting quality regressions through log analysis.

- With Argo Rollouts alone, SREs and platform teams may not be able to triage the risk of a release and resolve issues in production.

- Neither Argo Rollouts nor Argo CD provides a way to check for abnormalities in production after deployment. Hence, to make a canary decision, teams have to depend on manual verification.

To avoid releasing risky software into production, OpsMx provides Autopilot, which can be integrated with Argo Rollouts to move software into Kubernetes safely.

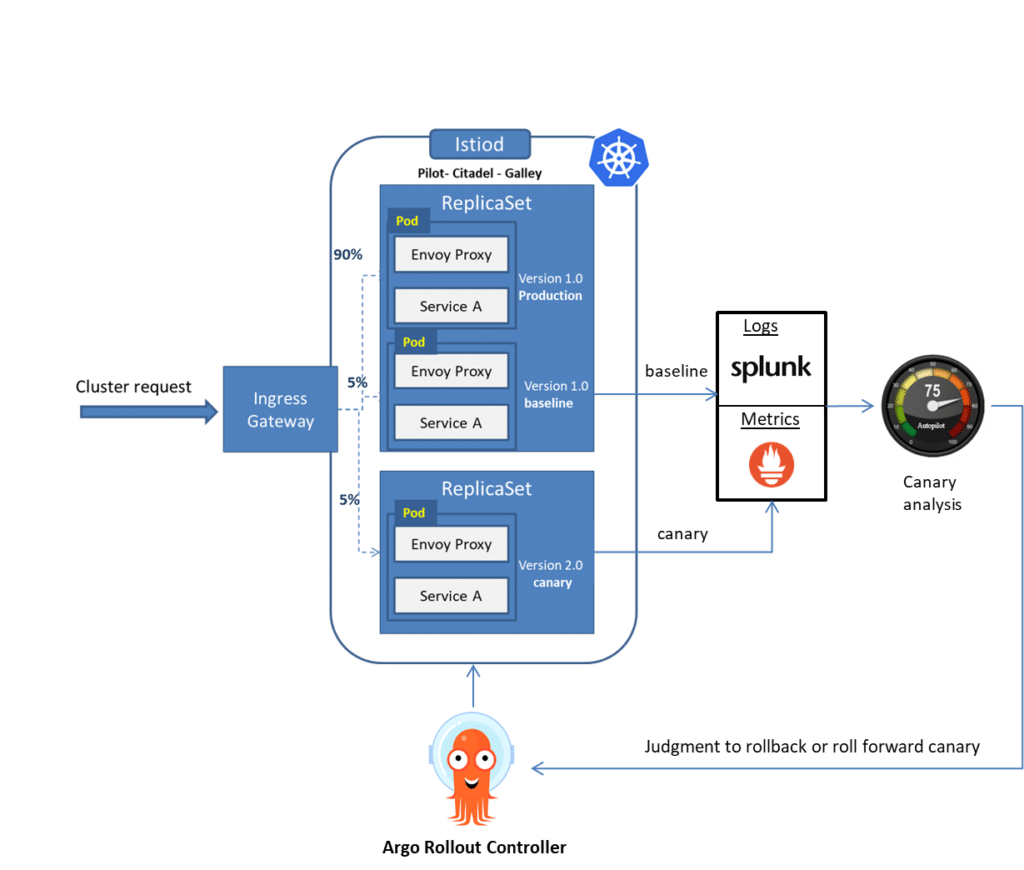

How does the integration between Argo Rollouts and Autopilot verify a canary?

Canary deployments involve splitting traffic between pods running the previous release (including the baseline) and pods running the new release.

Although the Kubernetes service proxy is responsible for receiving and splitting external requests, the Rollout CRD lets you define traffic routing rules, such as setting a canary weight with a pause duration or using dynamic canary scaling. For this blog, however, we use the most straightforward option: an experiment-based canary release, in which traffic is split among the pods in a round-robin fashion.

Once traffic is split across the pods through an ingress gateway, the performance and quality of the application can be tracked with monitoring and logging tools, and that data can be sent to Autopilot for further analysis. Based on the analysis, Argo Rollouts can roll a release back or forward (refer to the image below).
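For comparison, the weight-based approach mentioned above is declared as a list of canary steps in the Rollout spec. The following is a sketch only; the weights and pause durations are arbitrary example values, and this post uses the experiment-based step instead.

```yaml
# Sketch only: weight-based canary steps (not the experiment-based setup used in this post).
# Weights and pause durations are arbitrary example values.
strategy:
  canary:
    steps:
      - setWeight: 20            # shift roughly 20% of traffic to the new version
      - pause: {duration: 10m}   # wait 10 minutes before the next step
      - setWeight: 50
      - pause: {}                # pause indefinitely until the rollout is promoted manually
```

Without a traffic router (an ingress controller or service mesh) configured, Argo Rollouts approximates each weight by scaling the canary and stable ReplicaSets, so the achievable split depends on the replica count.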

OpsMx Autopilot provides the intelligence for CI/CD processes to deliver software safely and securely. Autopilot uses AI/ML to analyze logs, metrics, and other data sources to identify the risk of all changes, automatically determining the confidence that an update can be promoted to the next pipeline stage without introducing errors. Autopilot also automates policy compliance, ensuring that all your governance rules and best practices are followed. Autopilot reduces errors in production, increases release velocity, and improves security, quality, and compliance.

After analyzing a release, Autopilot sends back the results, and Argo Rollouts decides to either abort or progress the release (refer to the images below). The best part is that Autopilot can fetch logs and metrics from many tools, such as Splunk, Sumo Logic, and AppDynamics, and highlight the risk of a release in production. If the new version has problems in production, such as latency issues or SQL connection issues, it can be quickly rolled back.

The primary benefits of integrating Autopilot with Argo Rollouts are:

- Autopilot helps you catch errors as soon as possible, before customers notice, and make a quick transition back to the older version.

- SREs get much-needed visibility and insight into the most probable causes of release errors, so they can resolve them faster.

- AI/ML analysis provides a faster and more accurate estimate of the risk of your release.

If you want to better understand how Autopilot uses machine learning and NLP techniques to assess risk, please read this blog.

Let us look at how you can implement a canary release with Argo Rollouts and Autopilot.

Implementing Canary with Argo Rollouts and Autopilot

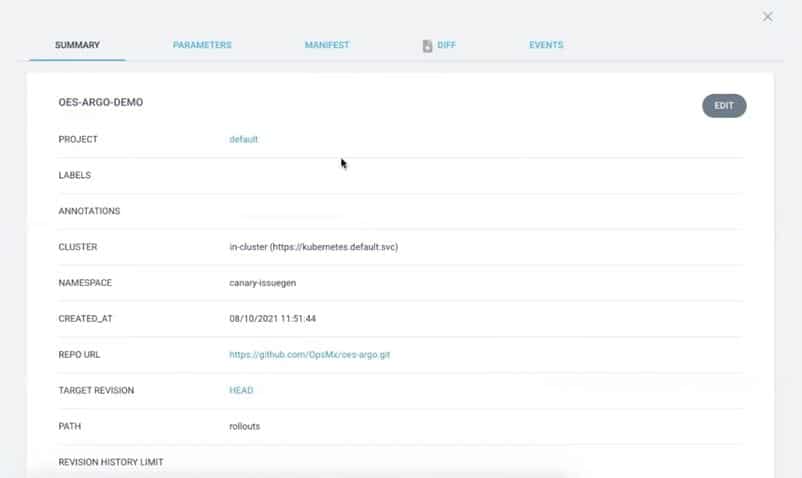

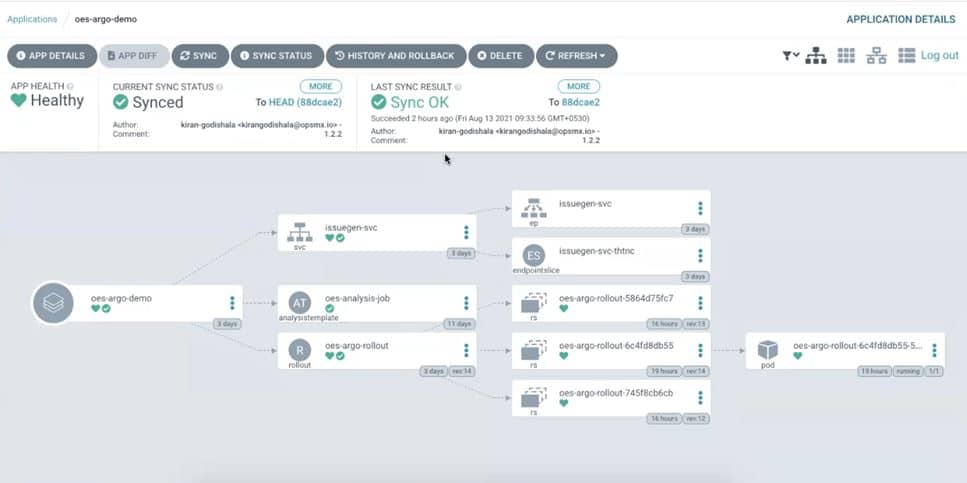

With Argo Rollouts, canary deployment is relatively easy. For simplicity, we have created an application in Argo CD called ‘oes-argo-demo’ whose manifests include a Rollout manifest.

Implementing the canary involves three steps:

- Create configuration files

- Configure the application in Argo CD

- Deploy changes with canary analysis

Step-1: Creating configuration files for canary

a. Define Service YAML file

b. Define Rollout YAML file

c. Define AnalysisTemplate

Define Service YAML file (issuegen-svc)

We will install the issuegen application into a pod, so we need a Service manifest (service.yml, shown below) to route traffic to the pod.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: issuegen-svc
  labels:
    app: oes-argo-rollout
spec:
  selector:
    app: oes-argo-rollout
  ports:
    - port: 3100
      targetPort: 8088
  type: ClusterIP
```
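Because issuegen-svc is of type ClusterIP, it is reachable only from inside the cluster; external traffic typically reaches it through an ingress. A minimal Ingress sketch that forwards requests to issuegen-svc on port 3100 might look like the following. The Ingress name, the `nginx` ingress class, and the host name are assumptions, not part of the original setup.

```yaml
# Sketch only: expose issuegen-svc outside the cluster.
# The Ingress name, ingress class, and host are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: issuegen-ingress
spec:
  ingressClassName: nginx
  rules:
    - host: issuegen.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: issuegen-svc   # the Service defined above
                port:
                  number: 3100       # Service port; it forwards to targetPort 8088 on the pods
```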

Define Rollout YAML file (oes-argo-rollout)

The Argo project provides a custom resource definition (CRD) called Rollout, which acts as a replacement for the Deployment workload resource in Kubernetes. We will configure the oes-argo-rollout resource to install issuegen version 1.2.5 into a pod. The version currently running is issuegen 1.2.2. The rollout starts two new pods, one running issuegen 1.2.5 (the canary) and one running issuegen 1.2.2 (the baseline), and traffic is split across all pods in a round-robin fashion.

Because of this, the traffic split follows the pod count. For example, if 8 pods are currently running, the experiment adds two more pods, one baseline (1.2.2) and one canary (1.2.5), for 10 pods in total. As a result, 80% of the traffic goes to the existing 1.2.2 pods and 10% goes to each of the new pods: the baseline (1.2.2) and the canary (1.2.5).
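The percentages in this example follow directly from the pod counts, assuming every pod behind the Service receives an equal share of requests (in practice the split is only approximately even). With $n_g$ denoting the number of pods in group $g$:

$$
\text{share}_g = \frac{n_g}{n_{\text{existing}} + n_{\text{baseline}} + n_{\text{canary}}},
\qquad
\text{share}_{\text{existing}} = \frac{8}{8 + 1 + 1} = 80\%,
\qquad
\text{share}_{\text{baseline}} = \text{share}_{\text{canary}} = \frac{1}{10} = 10\%
$$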

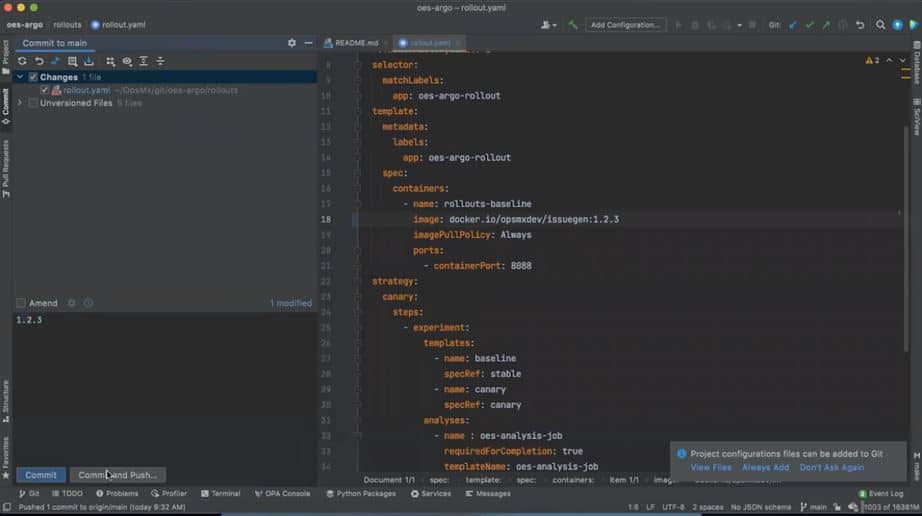

The oes-argo-rollout manifest is shown below:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: oes-argo-rollout
spec:
  replicas: 1
  revisionHistoryLimit: 2
  selector:
    matchLabels:
      app: oes-argo-rollout
  template:
    metadata:
      labels:
        app: oes-argo-rollout
    spec:
      containers:
        - name: rollouts-baseline
          image: docker.io/opsmxdev/issuegen:1.2.5
          imagePullPolicy: Always
          ports:
            - containerPort: 8088
  strategy:
    canary:
      steps:
        - experiment:
            templates:
              - name: baseline
                specRef: stable
              - name: canary
                specRef: canary
            analyses:
              - name: oes-analysis-job
                requiredForCompletion: true
                templateName: oes-analysis-job
                args:
                  - name: experiment-hash
                    valueFrom:
                      podTemplateHashValue: Latest
                  - name: baseline-hash
                    value: "{{templates.baseline.podTemplateHash}}"
                  - name: canary-hash
                    value: "{{templates.canary.podTemplateHash}}"
```

The oes-argo-rollout resource references another custom resource, an AnalysisTemplate. Let us see how to define the AnalysisTemplate next.

Define AnalysisTemplate YAML file (oes-analysis-job)

AnalysisTemplate is an Argo Rollouts CRD that lets users specify how to perform canary analysis: which metrics and values it should consider. You can find the spec we used below, or you can download the YAML file from here.

oes-analysis-job is the AnalysisTemplate that starts an analysis pod only when a new version is committed to GitHub. The new pod runs verifyjob v5, a job we wrote that sends the metadata of the metric and log sources for the baseline and the canary to OpsMx Autopilot.

```yaml
kind: AnalysisTemplate
apiVersion: argoproj.io/v1alpha1
metadata:
  name: oes-analysis-job
spec:
  args:
    - name: experiment-hash
    - name: canary-hash
    - name: baseline-hash
  metrics:
    - name: oes-analysis-job
      count: 1
      provider:
        job:
          spec:
            backoffLimit: 0
            template:
              spec:
                restartPolicy: Never
                containers:
                  - name: oes-analysis-job
                    image: opsmx11/verifyjob:v5
                    imagePullPolicy: Always
                    env:
                      - name: EXPERIMENT_HASH
                        value: "{{args.experiment-hash}}"
                      - name: BASELINE_HASH
                        value: "{{args.baseline-hash}}"
                      - name: CANARY_HASH
                        value: "{{args.canary-hash}}"
                      - name: LIFETIME_HOURS
                        value: "0.05"
                      - name: LOG_ENABLED
                        value: "false"
```

Once you have created the files, you can create an application in Argo CD.

Step-2: Configure application in Argo CD

We will create an application called ‘oes-argo-demo’ in the Argo CD UI that runs the issuegen image. To create the application in Argo CD, you need to provide the Kubernetes cluster name, the namespace, and the GitHub link where the manifest files are kept.

Once the application is configured, the Argo CD UI shows it as below:
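If you prefer to declare the application instead of creating it in the UI, Argo CD also accepts an Application manifest. The sketch below mirrors the fields listed above; the repository URL, manifest path, and destination namespace are placeholders to replace with your own values, and the `argocd` namespace assumes a default Argo CD installation.

```yaml
# Sketch only: declarative equivalent of creating 'oes-argo-demo' in the Argo CD UI.
# repoURL, path, and the destination namespace are placeholders.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: oes-argo-demo
  namespace: argocd                 # namespace where Argo CD runs (assumed default)
spec:
  project: default
  source:
    repoURL: https://github.com/<your-org>/<your-repo>.git   # Git repo holding the manifests
    targetRevision: HEAD
    path: <path-to-manifests>       # directory with the Service, Rollout, and AnalysisTemplate
  destination:
    server: https://kubernetes.default.svc   # the cluster Argo CD itself runs in
    namespace: <target-namespace>
  # No syncPolicy.automated block: Git changes are synced manually.
```

Leaving out an automated sync policy keeps the manual "out-of-sync, then sync" flow that the next step relies on.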

Step-3: Deploy changes with canary

Whenever a change is pushed to Git (for example, changing the issuegen version from 1.2.2 to 1.2.3), Argo CD shows an “out-of-sync” status and prompts you to synchronize the production state with the changes in Git.

When we click Sync, Argo Rollouts creates an Experiment object, which creates two ReplicaSets that in turn create one pod each: the baseline and the canary. An analysis job is then triggered to send the metric and log metadata to Autopilot.
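In this setup, “changing the version” means editing the image tag in the Rollout manifest stored in Git. Here is a sketch of the relevant fragment of oes-argo-rollout, using the 1.2.3 tag from the example above; the rest of the manifest stays unchanged.

```yaml
# Fragment of the oes-argo-rollout manifest (spec.template.spec.containers only).
# Committing this tag change is what makes Argo CD report the application as out of sync.
spec:
  template:
    spec:
      containers:
        - name: rollouts-baseline
          image: docker.io/opsmxdev/issuegen:1.2.3   # previously issuegen:1.2.2
```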

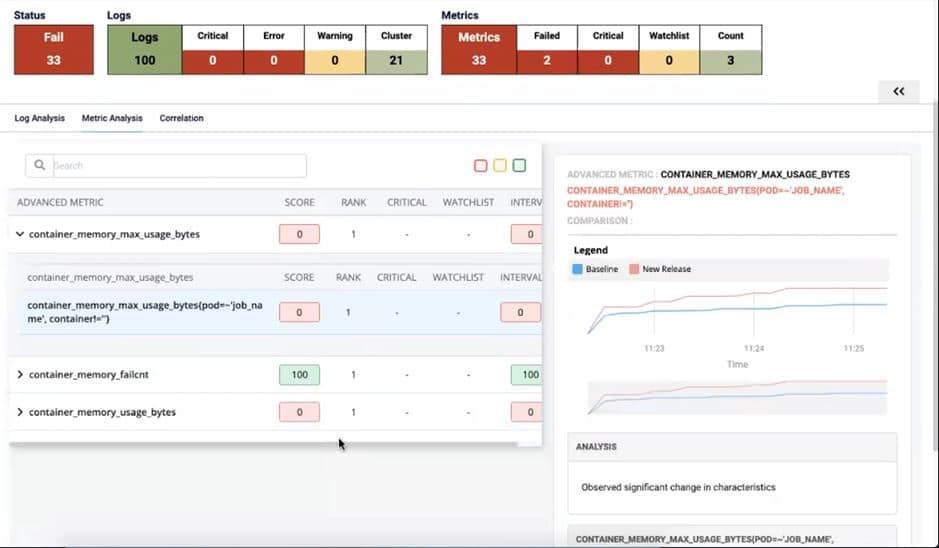

Autopilot fetches the logs (from Splunk) and time-series metrics (from Prometheus) to produce a risk analysis report for the application:

In the above image, Autopilot shows that the log analysis passed, but the metric analysis found a critical error. For diagnosis, Autopilot shows that metrics such as the canary's container_memory_max_usage_bytes are above the acceptable threshold set relative to the baseline's container_memory_max_usage_bytes.

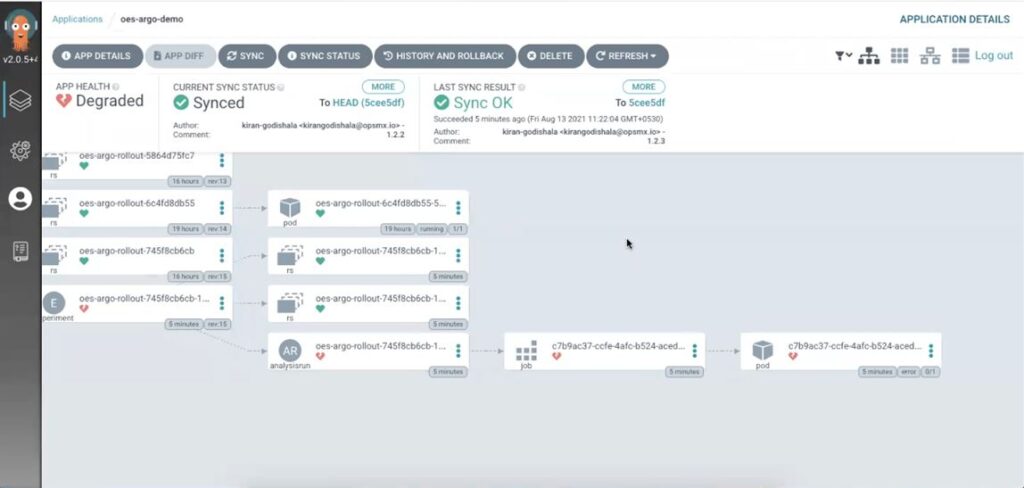

Autopilot sends this risk assessment and its fail judgment back to Argo, and the rollout is marked as failed or degraded (refer to the screenshot below).

If you want to roll out a new release to production safely using Argo Rollouts, you can integrate Autopilot to provide canary analysis and a judgment to roll your release forward or back.

In case you are interested, you can try the Autopilot community edition for free.


About OpsMx

Founded with the vision of “delivering software without human intervention,” OpsMx enables customers to transform and automate their software delivery processes. OpsMx builds on open-source Spinnaker and Argo with services and software that help DevOps teams SHIP BETTER SOFTWARE FASTER.
