What is CI/CD?

CI/CD stands for Continuous Integration and Continuous Deployment (or Delivery). It is a software delivery concept and a core aspect of DevOps that automates multiple stages of the DevOps workflow.

There is no standard definition of CI/CD, but in modern-day software delivery and deployment, CI/CD is considered the heartbeat of a well-oiled DevOps process. It enables automation and high-velocity software delivery with improved reliability and availability. But to truly understand CI/CD, you need to familiarize yourself with a few terms.

Software Delivery vs CI vs CD vs CI/CD Pipeline

Since a CI/CD pipeline is a complex process, let me first address the overarching theme, i.e., software delivery. I’ll start by explaining what a software delivery pipeline is and then define the other concepts in this process, such as CI and CD.

What is a software delivery pipeline?

A software delivery pipeline is a series of dependent stages through which code, a package, or an artifact flows from the developer’s system to a production server. The journey from the developer’s system is not simple, and the code has to pass through multiple stages before it is deployed into production.

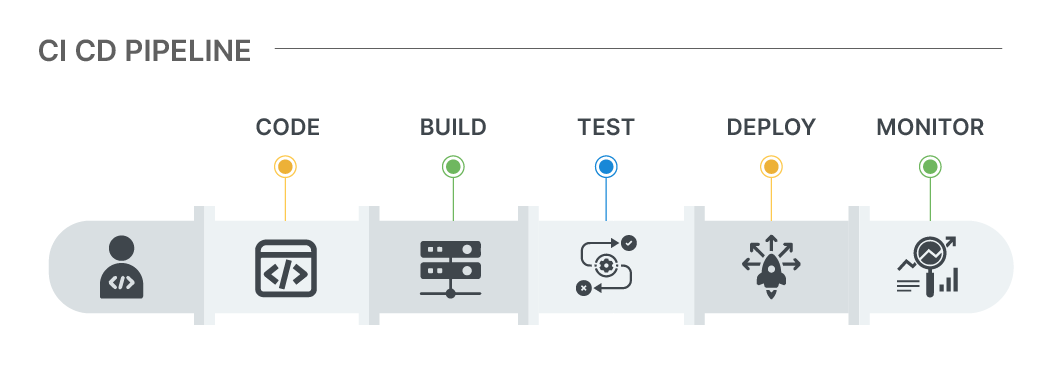

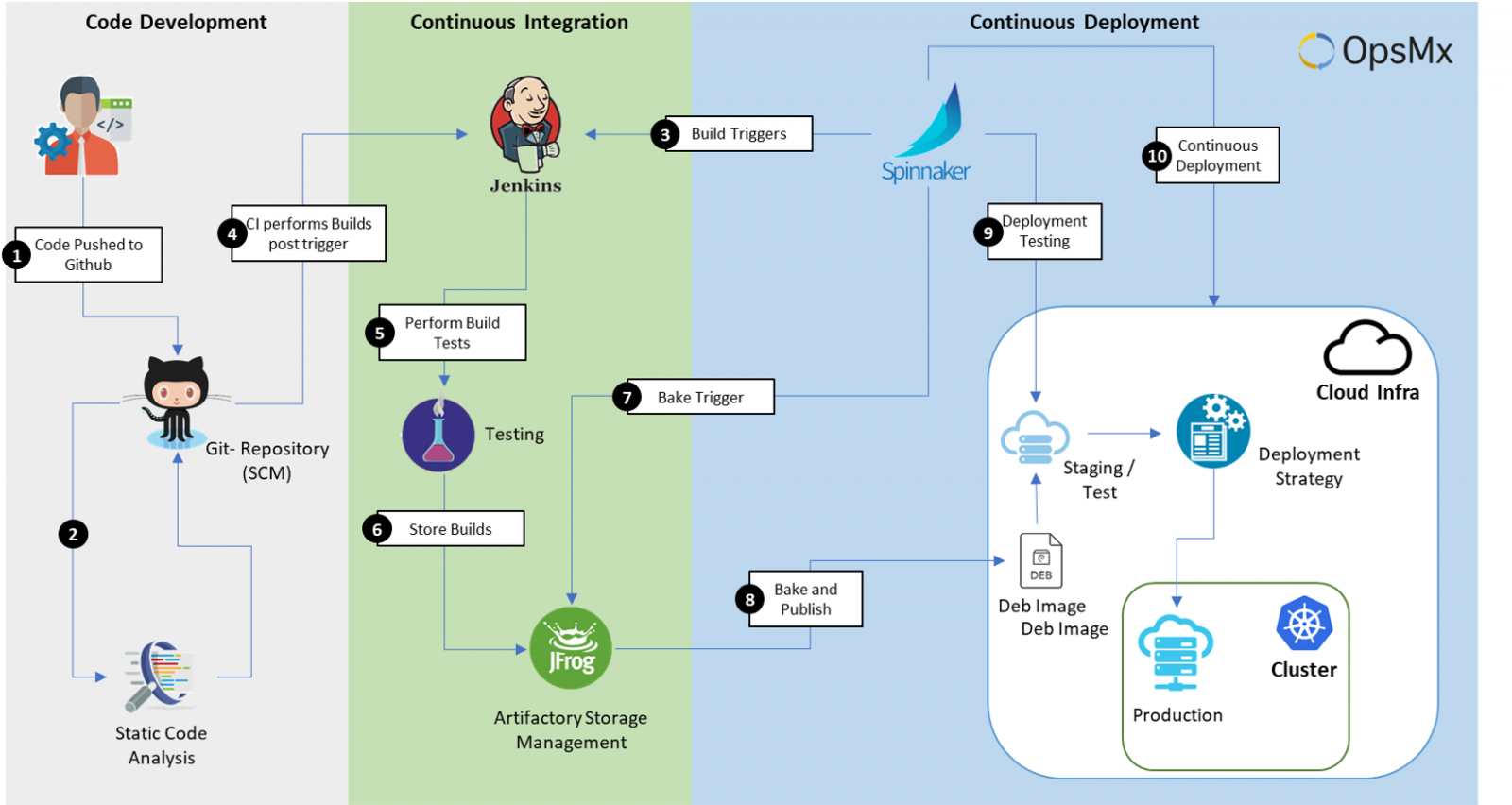

The code progresses from code check-in through the build, test, deploy, and production stages. Over the years, engineers have automated the steps of this process. This automation led to two primary processes, Continuous Integration (CI) and Continuous Delivery (CD), which together make up a CI/CD pipeline. Without further ado, let me define CI and CD.

What is CI and CD?

CI and CD are DevOps concepts that allow multiple changes to be made to a codebase simultaneously. To be more specific:

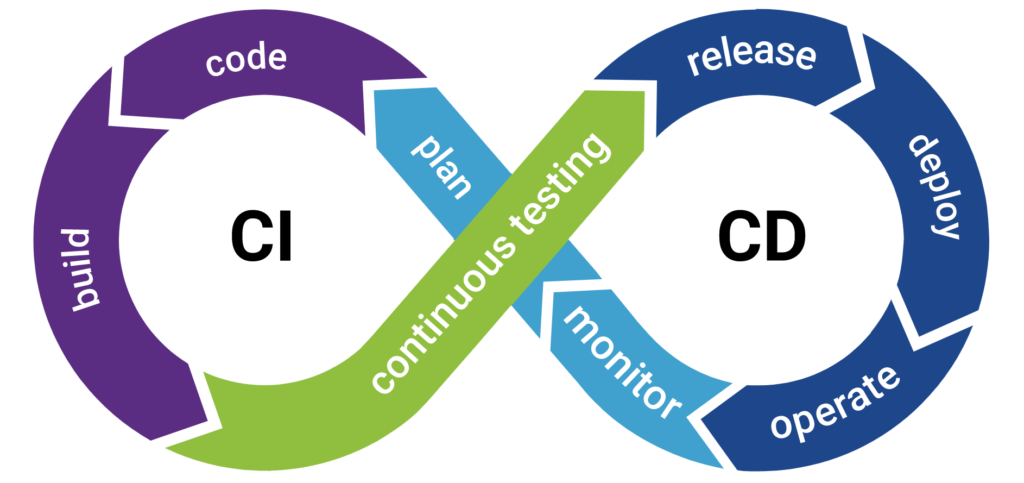

What is Continuous Integration (CI)?

CI is the practice of integrating all your code changes into the main branch of a shared code repository, automatically testing each change during commit or merge, and then automatically kicking off a build.

What is Continuous Delivery (CD)?

CD is a software development practice that works in conjunction with CI to automate the infrastructure provisioning and application release process.

What is a CI/CD pipeline?

A CI/CD pipeline is a series of interconnected steps that runs from code commit through testing, staging, and deployment testing to, finally, deployment onto the production servers. Most of these stages are automated to create a seamless software delivery process.
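The idea of "dependent stages" can be illustrated with a minimal Python sketch: each stage runs only if every earlier stage succeeded, and the first failure stops the pipeline. The `run_pipeline` function and the placeholder lambdas are hypothetical; a real pipeline would invoke CI/CD tooling at each stage.

```python
from typing import Callable

# A stage is a (name, action) pair; the action returns True when the stage succeeds.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order and stop at the first failure.

    Returns whether the whole pipeline succeeded and the names of the
    stages that actually ran.
    """
    executed: list[str] = []
    for name, action in stages:
        executed.append(name)
        if not action():
            print(f"stage '{name}' failed; later stages are skipped")
            return False, executed
        print(f"stage '{name}' passed")
    return True, executed


if __name__ == "__main__":
    # Placeholder actions; a real pipeline would call build/test/deploy tooling here.
    pipeline: list[Stage] = [
        ("code commit", lambda: True),
        ("testing", lambda: True),
        ("staging", lambda: False),  # simulate a failure in staging
        ("deployment testing", lambda: True),
        ("production", lambda: True),
    ]
    succeeded, ran = run_pipeline(pipeline)
    print(succeeded, ran)
```

Because each stage depends on the previous one, a failure in staging means deployment testing and production never run.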

Before we go any further, it is important to understand why CI/CD matters to DevOps.

Tabular comparison: Software Delivery vs CI vs CD vs CI/CD Pipeline

| | Software Delivery | CI | CD | CI/CD Pipeline |
| --- | --- | --- | --- | --- |
| Objective | The overall process of delivering software to end users or a production environment | The process of automatically integrating code changes into a shared repository and running automated tests | The process of automatically deploying code to a production environment after passing all tests | A set of practices and tools that automate the entire software delivery process, including integration, testing, and deployment |
| Automation | The overall process may involve manual steps and lacks complete automation | Focuses on automating the integration and testing of code changes | Automates the deployment process but may require human approval before releasing to production | Automates the entire software delivery pipeline, from code integration to testing and deployment |
| Human Intervention | Requires more human intervention, especially in the deployment phase | Automates code integration and testing with minimal human intervention | May involve human approval before production deployment | May include manual approval gates, but the process is largely automated |

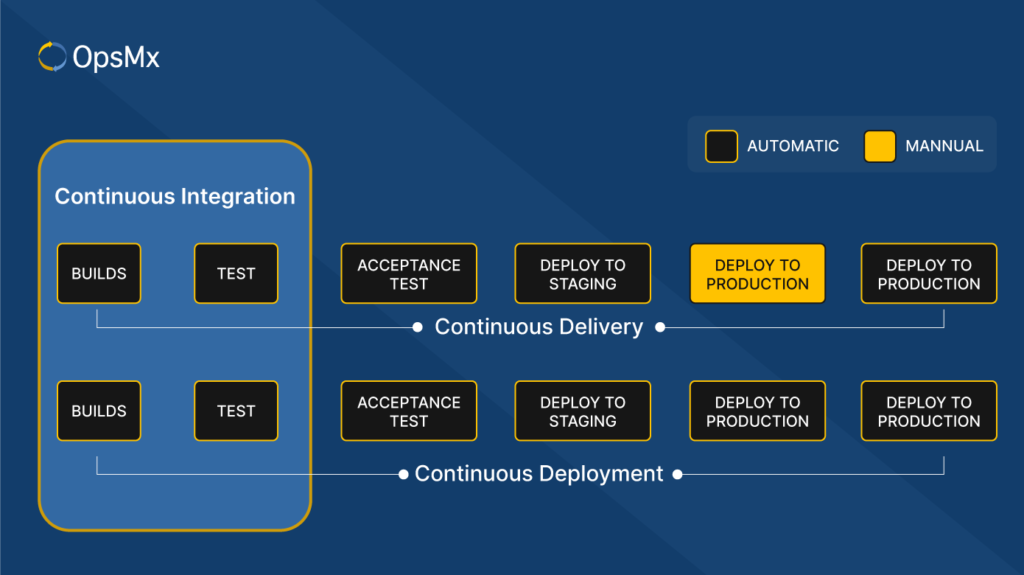

Continuous Delivery vs Continuous Deployment: How different are they?

It is easy to confuse Continuous Delivery with Continuous Deployment. The primary difference between Continuous Delivery and Continuous Deployment is the level of automation and human intervention in the release process.

Continuous Delivery includes manual approval before deploying to production, while Continuous Deployment automates even the deployment process, pushing code changes to production as soon as they pass automated tests.

In Continuous Delivery, while code changes are automatically built, tested, and prepared for deployment, the actual deployment to the production environment requires manual approval. After automated testing and validation in a staging or pre-production environment, a release manager or team decides when and if the code should be deployed to production. This provides an additional layer of control and allows for last-minute checks and approvals. In summary, Continuous Delivery provides a balance between automation and control.

Continuous Deployment, however, automatically deploys every code change that passes automated tests and quality checks to the production environment without manual intervention. The intent is to maximize automation by minimizing the time between writing code and making it available to users. This approach is often used for web applications, where rapid updates and bug fixes are essential. Eliminating manual release bottlenecks results in faster delivery of new features and bug fixes.
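To make the difference concrete, here is a minimal Python sketch of the production release decision under each model. The `Change` class, the `should_deploy_to_production` function, and the mode strings are hypothetical illustrations, not part of any specific CD tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Change:
    version: str
    tests_passed: bool


def should_deploy_to_production(change: Change, mode: str,
                                approved: Optional[bool] = None) -> bool:
    """Decide whether a tested change goes to production.

    mode="delivery":   the change is ready, but a person must approve it.
    mode="deployment": every change that passes automated tests ships.
    """
    if not change.tests_passed:
        return False  # in both models, failing changes never reach production
    if mode == "deployment":
        return True
    if mode == "delivery":
        return approved is True  # waits for a release manager's decision
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    change = Change(version="1.4.0", tests_passed=True)
    print(should_deploy_to_production(change, "deployment"))               # True
    print(should_deploy_to_production(change, "delivery"))                 # False
    print(should_deploy_to_production(change, "delivery", approved=True))  # True
```

The only difference between the two branches is the approval check, which mirrors the "level of automation and human intervention" distinction described above.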

CI/CD Benefits

Top 5 Benefits of a CI/CD Pipeline

- Faster Development: A CI/CD pipeline automates manual processes, thereby reducing the time it takes to release new features or bug fixes.

- Improved Quality: Continuous testing and integration catch and address issues early, leading to more reliable software.

- Better Collaboration: Developers can work in smaller, more manageable code increments, reducing conflicts and making collaboration smoother.

- Reliable Deployments: Automation reduces the risk of human error during delivery, leading to more consistent and predictable releases.

- Rapid Feedback: Developers receive quick feedback on their code changes, allowing them to fix issues before they become major problems.

Components of a CI/CD Pipeline

In a CI/CD pipeline, the different stages fall broadly under two phases of the software development lifecycle: CI and CD. While there are other phases and stages within the development process, the scope of this article is limited to the stages within the CI and CD phases.

The CI phase consists of the following stages:

- Build

- Unit Tests

- Integration Tests

The CD phase consists of the following stages:

- Release

- Deploy

- Operate

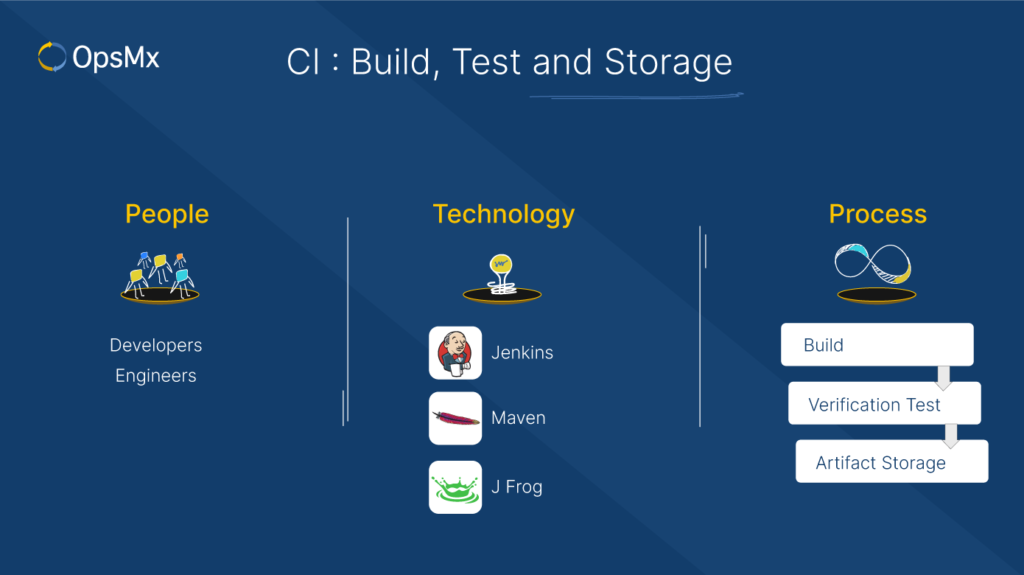

Now let’s look at what happens in each of these stages using the PPT (People, Process, Tools) framework, including the professionals involved in each process.

Understanding the different stages of CI (Continuous Integration)

As explained earlier, CI is where code updates and changes are collected from a developer (or a group of developers) and merged into the main source branch. The various stages are:

1. Build

- People involved: Developers and Engineers

- Tools involved: Jenkins, Maven, Bamboo CI, CircleCI, Travis CI, Azure DevOps

Process:

The “build stage” in the CI pipeline is where the source code is compiled, packaged, and prepared for deployment. This stage is primarily focused on transforming the human-readable source code into executable or deployable artifacts, which can then be tested and eventually deployed to various environments, including production.

This is a critical stage because it ensures that the software is consistently and correctly built, making it ready for testing and deployment. It also helps catch integration issues and potential bugs early in the development process, reducing the likelihood of issues in later stages.

Build Verification Tests (BVT) typically happen immediately after the build is created to verify whether all the modules are integrated properly and critical functionalities are working fine.
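As a rough illustration of the build stage followed by a BVT, here is a minimal Python sketch. Python is packaged rather than compiled, so the "build" here simply zips the source files into a versioned artifact and records a SHA-256 checksum; the BVT then checks that the archive is intact and contains the modules assumed to be critical. The function names and file layout are hypothetical; a real pipeline would run a build tool such as Maven from Jenkins or another CI server.

```python
import hashlib
import tempfile
import zipfile
from pathlib import Path


def build_artifact(src_dir: Path, out_file: Path) -> str:
    """Package every .py file under src_dir into a zip artifact.

    Returns the artifact's SHA-256 checksum so later stages can verify it.
    """
    with zipfile.ZipFile(out_file, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(src_dir.rglob("*.py")):
            zf.write(path, arcname=path.relative_to(src_dir).as_posix())
    return hashlib.sha256(out_file.read_bytes()).hexdigest()


def build_verification_test(artifact: Path, required: list[str]) -> bool:
    """Minimal BVT: the archive is intact and contains the critical modules."""
    with zipfile.ZipFile(artifact) as zf:
        if zf.testzip() is not None:  # testzip() names the first corrupt member
            return False
        names = set(zf.namelist())
    return all(module in names for module in required)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp, "src")
        src.mkdir()
        (src / "app.py").write_text("print('hello')\n")
        (src / "utils.py").write_text("def add(a, b):\n    return a + b\n")
        artifact = Path(tmp, "app-1.0.0.zip")
        print("checksum:", build_artifact(src, artifact))
        print("BVT passed:", build_verification_test(artifact, ["app.py", "utils.py"]))
```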

2. Unit Tests

- People involved: Testers, QA Engineers, Database Administrators (DBA)

- Tools involved: JUnit, NUnit, pytest, TestNG, etc.

Process:

After the BVT checks, unit tests (UT) are added to the pipeline to further reduce failures in production. Unit testing validates individual components or units of code in isolation against their expected behavior.

Unit testing is an essential step in the CI phase because it runs every time code changes are committed to the version control system, helping ensure that new code does not introduce defects into the software. It individually assesses the smallest parts of an application’s code, referred to as “units”.
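Here is a minimal example of what such a unit test can look like, using Python's standard-library `unittest` module (the Python counterpart of JUnit/NUnit in the tools list). The `apply_discount` function is a hypothetical unit under test; note that the tests touch no database or network, only the function itself.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: apply a percentage discount to a price."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Each test exercises apply_discount in isolation."""

    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 150)


if __name__ == "__main__":
    # In CI this would typically run as `python -m unittest` on every commit.
    unittest.main(exit=False)
```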

3. Integration Tests

- People involved: Testers, QA Engineers, Database Administrators (DBA)

- Tools involved: Selenium, Cucumber, TestNG, Appium, JMeter, SOAP UI, Tarantula, Postman, etc.

Process:

Integration tests are an essential part of the CI phase which test the interactions between different components or units of a software application. Unlike unit tests, which focus on testing individual code units in isolation, integration tests verify that these units work correctly when combined and interact with one another.

This is a crucial step because it helps identify issues that may not be apparent during unit testing. Instead of isolated units, it focuses on how different parts of the application collaborate, share data, and communicate, ensuring that they work together as expected.
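To contrast this with the unit test above, here is a minimal Python sketch of an integration test. It wires two hypothetical components, a `UserRepository` backed by an in-memory SQLite database and a `SignupService` that depends on it, and checks that they share data correctly, rather than mocking one of them out.

```python
import sqlite3
from typing import Optional


class UserRepository:
    """Hypothetical data-access component backed by SQLite."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, email TEXT UNIQUE)"
        )

    def add(self, email: str) -> int:
        cur = self.conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
        self.conn.commit()
        return cur.lastrowid

    def find(self, email: str) -> Optional[int]:
        row = self.conn.execute(
            "SELECT id FROM users WHERE email = ?", (email,)
        ).fetchone()
        return row[0] if row else None


class SignupService:
    """Hypothetical business-logic component that depends on the repository."""

    def __init__(self, repo: UserRepository):
        self.repo = repo

    def sign_up(self, email: str) -> int:
        normalized = email.strip().lower()
        if self.repo.find(normalized) is not None:
            raise ValueError("email already registered")
        return self.repo.add(normalized)


def integration_test() -> None:
    # Wire the real components together (no mocks) against an in-memory
    # database, so the test exercises how they share data.
    service = SignupService(UserRepository(sqlite3.connect(":memory:")))
    user_id = service.sign_up("  Alice@Example.com ")
    # The service normalized the email, and the repository stored it that way.
    assert service.repo.find("alice@example.com") == user_id
    try:
        service.sign_up("ALICE@example.com")
    except ValueError:
        pass  # duplicate detected across both components, as expected
    else:
        raise AssertionError("duplicate sign-up should be rejected")
    print("integration test passed")


if __name__ == "__main__":
    integration_test()
```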

3.1 Build Verification Test (BVT)/Smoke Tests and Unit Tests:

Smoke testing or BVT is performed immediately after the build is created. BVT verifies whether all the modules are integrated properly and the program’s critical functionalities are working fine. The aim is to reject a badly broken application so that the QA team does not waste time installing and testing the software application.

After these checks, unit tests (UT) are added to the pipeline to further reduce failures in production. Unit testing validates whether individual units or components of the code written by the developer perform as expected.
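A smoke test usually checks only a handful of critical paths so that a badly broken build is rejected within seconds. The Python sketch below assumes the freshly built application exposes HTTP endpoints; `/health` and `/login` are hypothetical critical paths, and `FakeAppHandler` is a local stand-in server so the example runs on its own.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeAppHandler(BaseHTTPRequestHandler):
    """Stand-in for the freshly built application (hypothetical endpoints)."""

    def do_GET(self):
        status = 200 if self.path in ("/health", "/login") else 404
        self.send_response(status)
        self.end_headers()
        self.wfile.write(b"ok" if status == 200 else b"not found")

    def log_message(self, *args) -> None:  # keep the console output quiet
        pass


def smoke_test(base_url: str, critical_paths: list[str]) -> bool:
    """Return False as soon as any critical endpoint is unreachable or non-200."""
    for path in critical_paths:
        try:
            with urllib.request.urlopen(base_url + path, timeout=5) as resp:
                if resp.status != 200:
                    return False
        except OSError:  # URLError, HTTPError, and timeouts are all OSError subclasses
            return False
    return True


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), FakeAppHandler)  # port 0 picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = f"http://127.0.0.1:{server.server_port}"
    print("smoke test passed:", smoke_test(base, ["/health", "/login"]))
    server.shutdown()
    server.server_close()
```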

3.2 Artifactory Storage:

Once a build is prepared, the packages are stored in a centralized location or database, such as Artifactory or another repository tool. Many builds can be generated per day, and keeping track of all of them can be difficult. Hence, as soon as a build is generated and verified, it is sent to the repository for storage. Repository tools such as JFrog Artifactory are used to store binary files such as .rar, .war, .exe, and .msi files. From here, testers can manually pick an artifact and deploy it to a test environment for testing.
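The core ideas of an artifact repository (one immutable file per name and version, plus a checksum to detect tampering) can be sketched in a few lines of Python. `LocalArtifactRepository` is a hypothetical toy backed by the local file system; it is not the JFrog Artifactory API.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


class LocalArtifactRepository:
    """Toy artifact store: one immutable file per (name, version), plus a checksum."""

    def __init__(self, root: Path):
        self.root = root

    def publish(self, artifact: Path, name: str, version: str) -> str:
        folder = self.root / name / version
        if folder.exists():
            raise FileExistsError(f"{name} {version} is already published")
        folder.mkdir(parents=True)
        stored = folder / artifact.name
        shutil.copy2(artifact, stored)
        digest = hashlib.sha256(stored.read_bytes()).hexdigest()
        (folder / "sha256.txt").write_text(digest)
        return digest

    def fetch(self, name: str, version: str, filename: str) -> Path:
        """Return the stored artifact after checking it has not been altered."""
        folder = self.root / name / version
        stored = folder / filename
        expected = (folder / "sha256.txt").read_text()
        if hashlib.sha256(stored.read_bytes()).hexdigest() != expected:
            raise ValueError(f"checksum mismatch for {name} {version}")
        return stored

    def versions(self, name: str) -> list[str]:
        """Published versions, sorted as plain strings."""
        folder = self.root / name
        return sorted(p.name for p in folder.iterdir()) if folder.exists() else []


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        build = Path(tmp, "shop.war")
        build.write_bytes(b"pretend this is a compiled web archive")
        repo = LocalArtifactRepository(Path(tmp, "repo"))
        print("published:", repo.publish(build, "shop", "1.0.0"))
        print("versions:", repo.versions("shop"))
        # A tester (or a CD tool) later pulls the exact same build for a test environment.
        print("fetched:", repo.fetch("shop", "1.0.0", "shop.war"))
```

Refusing to overwrite a published version is the design choice that makes every later stage test and deploy exactly the bytes that passed the BVT.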

4. CI Test Stages: People, Process, and Technology:

- People: Testers, QA Engineers

- Technologies: Selenium, Appium, JMeter, SOAP UI, Tarantula

Process:

After the build process, a series of automated tests validates the correctness of the code. This stage prevents errors from reaching production. Depending on the size of the build, these checks can take anywhere from a few seconds to hours. In large organizations where multiple teams are involved, the checks run in parallel environments, which saves precious time and notifies developers of bugs early.

These automated tests are set up by testers (also known as QA engineers), who write test cases and scenarios based on user stories. They perform regression analysis and stress tests to check for deviations from the expected output. Activities associated with this stage include sanity tests, integration tests, and stress tests. This is an advanced level of testing. To sum up, this testing process helps reveal issues that were probably unknown to the developer while writing the code.
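The time saved by running checks in parallel can be sketched with Python's `concurrent.futures`. Here `run_suite` is a hypothetical stand-in that just sleeps for the suite's duration; in practice each worker would launch a real test suite in its own environment. Failures are reported as soon as each suite finishes, not after all of them complete.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_suite(name: str, duration_s: float, passes: bool) -> tuple[str, bool]:
    """Hypothetical stand-in for a test suite: waits duration_s, then reports."""
    time.sleep(duration_s)
    return name, passes


def run_in_parallel(suites: dict[str, tuple[float, bool]],
                    workers: int = 4) -> dict[str, bool]:
    """Run all suites concurrently and report failures as soon as they finish."""
    results: dict[str, bool] = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(run_suite, name, duration, passes)
                   for name, (duration, passes) in suites.items()]
        for future in as_completed(futures):
            name, passed = future.result()
            results[name] = passed
            if not passed:
                print(f"{name} failed: notify the developer now")
    return results


if __name__ == "__main__":
    suites = {"sanity": (0.1, True), "regression": (0.3, True), "integration": (0.2, False)}
    start = time.perf_counter()
    outcome = run_in_parallel(suites)
    # Wall time is roughly the slowest suite (~0.3 s), not the sum (~0.6 s).
    print(outcome, f"{time.perf_counter() - start:.2f}s")
```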

4.1 Integration Tests:

Tools such as Cucumber, Selenium, and many more enable QA engineers to perform integration tests by combining individual application modules and testing them as a group, evaluating their compliance with specified functional requirements. Eventually, someone needs to approve the set of updates and move them to the next stage, which is performance testing. Although this verification process can be cumbersome, it is an important part of the overall process. Fortunately, some emerging solutions take care of the verification process. The Delivery Intelligence module of the ISD platform by OpsMx is one such solution.

4.2 Load and Stress Testing:

One of the primary responsibilities of QA engineers is to ensure that an application is stable and performs well when exposed to high traffic. To do this, they perform load and stress testing using automated testing tools such as Selenium, JMeter, and many more. However, these tests are not run on every single update, because full stress testing is time-consuming. So, whenever teams need to release a set of new capabilities, they usually group multiple updates together and run full performance testing. In other cases, when only a single update has to be moved to the next stage, the pipeline may include canary testing as an alternative.
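The basic shape of a load test (fire many concurrent requests, record latencies, compare a high percentile against a budget) can be sketched in Python. This is a toy illustration, not a substitute for a tool like JMeter; `handle_request` is a hypothetical stand-in for the system under test, and the 95th-percentile budget is an assumed pass/fail criterion.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request() -> None:
    """Hypothetical stand-in for the system under test (about 10 ms of work)."""
    time.sleep(0.01)


def timed_call(_: int) -> float:
    start = time.perf_counter()
    handle_request()
    return time.perf_counter() - start


def load_test(total_requests: int, concurrency: int, p95_budget_s: float) -> dict:
    """Fire total_requests calls with `concurrency` workers and check p95 latency."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    # quantiles(n=100) returns the 99 percentile cut points; index 94 is the 95th.
    p95 = statistics.quantiles(latencies, n=100)[94]
    return {
        "requests": len(latencies),
        "p95_s": round(p95, 4),
        "within_budget": p95 <= p95_budget_s,
    }


if __name__ == "__main__":
    print(load_test(total_requests=200, concurrency=20, p95_budget_s=0.05))
```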

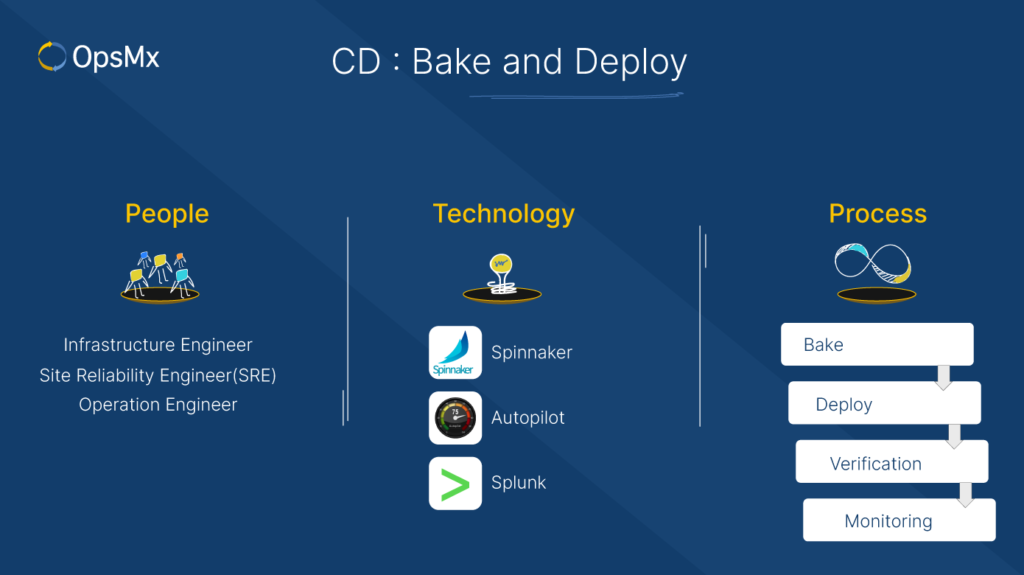

Understanding the different stages of CD (Continuous Deployment/Delivery)

As explained earlier, CD is where code changes for new features, configurations, bug fixes, and experiments are deployed into production safely. The various stages are:

1. Release & Deploy

- People involved: Infrastructure Engineers, Site Reliability Engineers (SRE), Operation Engineers, Release Engineers

- Tools involved: OpsMx Secure CD, Spinnaker, Argo CD, Tekton CD

Process:

‘Release’ and ‘Deploy’ are similar, related concepts that are often used interchangeably, but they represent different steps in the process of making your software changes available to users or customers. These stages have distinct purposes and often different levels of automation and control.

Once the code has completed its journey through the testing stage, it is reasonable to assume that it is qualified to be deployed to the servers, where it will be merged with the main application. Before being deployed to production, however, it is deployed to a test/staging or beta environment, where the product team tests it internally.

While ‘Deploy’ refers to the act of moving new code (for new features) from one environment to another, ‘Release’ means making it generally available to end users. This stage can therefore be summarized as the process of making new code changes and features available in a target environment or to end users.

1.1 Bake

“Baking” refers to creating an immutable image instance from the source code together with the current production configuration. This configuration can include a range of things, such as database changes or other infrastructure updates. Either Spinnaker triggers Jenkins to perform this task, or, in other cases, organizations prefer to use Packer.
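What "immutable" means in practice can be shown with a small Python sketch: the image identifier is derived from the application version, a source digest, and the configuration, and the resulting record cannot be changed in place. The `BakedImage` and `bake` names, the `img-` prefix, and the sample values are hypothetical; this does not model how Spinnaker, Jenkins, or Packer build real machine images.

```python
import hashlib
import json
from dataclasses import FrozenInstanceError, dataclass


@dataclass(frozen=True)
class BakedImage:
    """Immutable image record: new code or config means baking a new image."""
    image_id: str
    app_version: str
    config_json: str  # configuration captured at bake time


def bake(app_version: str, source_digest: str, config: dict) -> BakedImage:
    # Canonical JSON (sorted keys, fixed separators) so equal configs hash equally.
    config_json = json.dumps(config, sort_keys=True, separators=(",", ":"))
    payload = f"{app_version}|{source_digest}|{config_json}".encode()
    image_id = "img-" + hashlib.sha256(payload).hexdigest()[:12]
    return BakedImage(image_id, app_version, config_json)


if __name__ == "__main__":
    prod_config = {"db_host": "db.internal", "replicas": 3}
    image = bake("2.3.1", source_digest="3f9c1a", config=prod_config)
    print(image.image_id)
    try:
        image.config_json = "{}"  # images are never patched in place
    except FrozenInstanceError:
        print("immutable: bake a new image instead")
```

Deriving the identifier from the inputs means the same code and configuration always produce the same image ID, while any configuration change (such as a database update) yields a new one.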

1.2 Deploy

Spinnaker automatically passes the baked image to the deploy stage, where the server group is deployed to a cluster. The Deployment stage follows a process functionally similar to the testing processes described above: deployments move first to test, then to staging, and finally to production after approvals and checks. This entire process is handled by tools such as Spinnaker.
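The ordering logic of this promotion (test, then staging, then production, each gated by approval and stopped by failing checks) can be sketched in Python. The `promote` function, the environment names, and the approval set are hypothetical illustrations of the flow described above, not Spinnaker's API.

```python
ENVIRONMENTS = ["test", "staging", "production"]


def promote(image_id: str, checks_passed: dict[str, bool],
            approved: set[str]) -> list[str]:
    """Deploy an image through the environments in order.

    An environment is entered only if it has been approved, and promotion
    stops at the first environment whose checks fail.
    Returns the environments the image was actually deployed to.
    """
    deployed: list[str] = []
    for env in ENVIRONMENTS:
        if env not in approved:
            print(f"{image_id}: waiting for approval to enter {env}")
            break
        deployed.append(env)
        print(f"{image_id}: deployed to {env}")
        if not checks_passed.get(env, False):
            print(f"{image_id}: checks failed in {env}; promotion stops here")
            break
    return deployed


if __name__ == "__main__":
    all_green = {"test": True, "staging": True, "production": True}
    # Production has not been approved yet, so the image stops at staging.
    print(promote("img-1a2b3c", all_green, approved={"test", "staging"}))
```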

1.3 Deployment Testing and Verification

This is also a key phase in which teams can optimize the overall CI/CD process. By now, the code has undergone rigorous testing, so it should rarely fail at this point. If it does fail, teams must be ready to resolve the failure as quickly as possible to minimize the impact on end customers. Teams should also consider automating this phase. Deployment to production is carried out using strategies such as Blue-Green, Canary Analysis, and Rolling Update. During the deployment stage, the running application is monitored to determine whether the current deployment is healthy or needs to be rolled back.
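A simplified version of the canary decision can be sketched in Python: compare the canary's error rate against the baseline and decide whether to promote, roll back, or keep waiting for more traffic. The thresholds (`max_relative_increase`, `min_requests`) are illustrative assumptions; real canary analysis tools typically compare many metrics with statistical tests rather than a single ratio.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(baseline: Metrics, canary: Metrics,
                    max_relative_increase: float = 0.10,
                    min_requests: int = 500) -> str:
    """Return "promote", "rollback", or "wait" for a canary release.

    The canary may be at most max_relative_increase worse than the baseline.
    Note: with a zero-error baseline, any canary error triggers a rollback.
    """
    if canary.requests < min_requests:
        return "wait"  # too little traffic to judge yet
    allowed = baseline.error_rate * (1 + max_relative_increase)
    return "promote" if canary.error_rate <= allowed else "rollback"


if __name__ == "__main__":
    baseline = Metrics(requests=10_000, errors=50)  # 0.5% errors
    print(canary_decision(baseline, Metrics(1_000, 5)))   # promote (0.5%)
    print(canary_decision(baseline, Metrics(1_000, 20)))  # rollback (2.0%)
    print(canary_decision(baseline, Metrics(100, 0)))     # wait
```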

2. Operate

- People involved: Site Reliability Engineers (SRE), Product Manager, Customer Success Manager

- Tools involved: No specific tools as such; SREs, however, leverage monitoring and logging tools to analyze system performance

Process:

The ‘Operate’ stage is sometimes combined with the ‘Monitor’ stage, but they are essentially different phases in the life cycle. While CI/CD primarily focuses on the development, testing, and deployment phases of the software lifecycle, the ‘operate’ stage extends the process into the operational phase, where the software is running in a production environment.

The main purpose of this stage is to manage and maintain the software in a production (or other) environment. It includes tasks related to monitoring, maintaining, and optimizing the software to ensure it performs well, is available to users, and remains secure and reliable.

This is another crucial phase because it allows non-technical stakeholders to provide valuable insights and feedback for continuous improvement and, most importantly, sets the stage and expectations for continuous monitoring processes.
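One common way SREs turn monitoring data from the operate stage into an actionable signal is an availability target (SLO) with an error budget. The Python sketch below assumes request and failure counts are already collected by a monitoring tool; the 99.9% target and the function names are illustrative assumptions.

```python
def availability(total_requests: int, failed_requests: int) -> float:
    """Share of requests served successfully during the period."""
    if total_requests == 0:
        return 1.0
    return (total_requests - failed_requests) / total_requests


def error_budget_remaining(total_requests: int, failed_requests: int,
                           slo: float = 0.999) -> float:
    """Fraction of the error budget left; negative means the SLO was breached."""
    allowed_failures = total_requests * (1 - slo)
    if allowed_failures == 0:
        return 1.0 if failed_requests == 0 else float("-inf")
    return 1 - failed_requests / allowed_failures


if __name__ == "__main__":
    total, failed = 1_000_000, 400
    print(f"availability: {availability(total, failed):.4%}")                 # 99.9600%
    print(f"error budget left: {error_budget_remaining(total, failed):.0%}")  # 60%
```

A shrinking error budget is a signal that can feed back into the pipeline, for example by slowing releases until reliability recovers.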

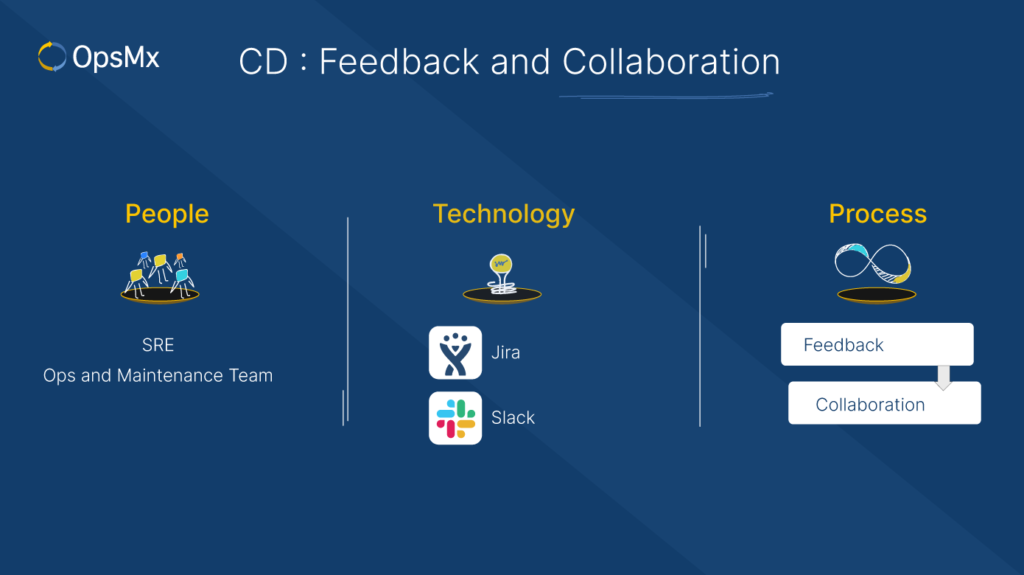

3. CD Feedback and Collaboration

- People: SREs, Ops, and Maintenance Team

- Technology: JIRA, ServiceNow, Slack, Email, Hipchat

Process:

One of the primary goals of the DevOps team is to release quickly and continuously while steadily reducing errors and performance issues. This is achieved through frequent feedback to developers and project managers about the new version’s quality and performance via Slack or email, and by promptly raising tickets in ITSM tools. Feedback systems are usually part of the entire software delivery process, so changes in the delivery are logged into the system so that the delivery team can act on them.
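The routing side of such a feedback loop can be sketched in Python: every pipeline event is posted to chat, while failures and degradations also go out by email and become tickets. `PipelineEvent`, `route_feedback`, and the channel names are hypothetical placeholders; a real setup would call the Slack, email, or ITSM (for example JIRA or ServiceNow) integrations instead of returning tuples.

```python
from dataclasses import dataclass


@dataclass
class PipelineEvent:
    stage: str
    version: str
    status: str  # "passed", "failed", or "degraded"
    detail: str = ""


def route_feedback(event: PipelineEvent) -> list[tuple[str, str]]:
    """Map a pipeline event to (channel, message) pairs.

    Channel names are placeholders for real chat, email, and ITSM integrations.
    """
    message = f"[{event.stage}] {event.version} {event.status}"
    if event.detail:
        message += f": {event.detail}"
    routes = [("chat", message)]  # every event is visible to the team
    if event.status in ("failed", "degraded"):
        routes.append(("email", message))   # developers and project managers
        routes.append(("ticket", message))  # tracked in the ITSM tool
    return routes


if __name__ == "__main__":
    event = PipelineEvent("deploy", "2.3.1", "degraded", "p95 latency up 40%")
    for channel, text in route_feedback(event):
        print(channel, "->", text)
```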

Parting Thoughts

An enterprise should evaluate a holistic continuous delivery solution that can automate, or facilitate the automation of, the stages described above. If you are considering implementing a CI/CD pipeline or automating your CI/CD pipeline workflow, OpsMx can help.

The OpsMx ISD platform leverages cloud architecture and can get you started by implementing a CI/CD workflow on a cloud server of your choice. Its pipeline-as-code feature simplifies moving any on-premises CI/CD pipeline to the cloud architecture within minutes.
