Differentiating Continuous Delivery from Continuous Deployment

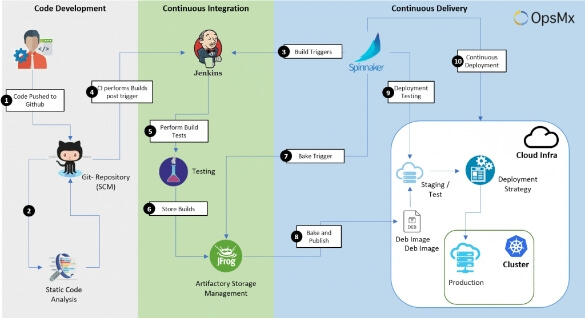

Continuous Delivery is a framework that picks up where a Continuous Integration cycle ends. It covers deploying code changes to staging and then to production after the build. It enables organizations to release code to production on demand.

Continuous Deployment, by contrast, automatically deploys every change that passes the pipeline to production, with no manual release step. This shortens the improvement loop because developers can verify their code in production soon after committing it. Developers can also try out new features with less risk, since a change can be rolled back as quickly as it was deployed.
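The distinction can be sketched in a few lines of Python. This is only an illustration, not a real pipeline: the `Release` class, the `promote` function, and the mode names are all hypothetical. The one difference between the two modes is whether production requires an explicit approval.

```python
from dataclasses import dataclass


@dataclass
class Release:
    version: str
    tests_passed: bool


def promote(release: Release, mode: str, approved: bool = False) -> str:
    """Return where a release ends up under a given (hypothetical) mode.

    mode="delivery":   production needs an explicit human approval.
    mode="deployment": production is reached automatically once tests pass.
    """
    if mode not in ("delivery", "deployment"):
        raise ValueError(f"unknown mode: {mode}")
    if not release.tests_passed:
        return "rejected"
    if mode == "deployment" or approved:
        return "production"
    # Deployable, but waiting for someone to press the button.
    return "staging"
```

With Continuous Delivery, `promote(release, "delivery")` stops at staging until someone passes `approved=True`; with Continuous Deployment, the same passing release goes straight to production.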

Why was there a need for Continuous Delivery?

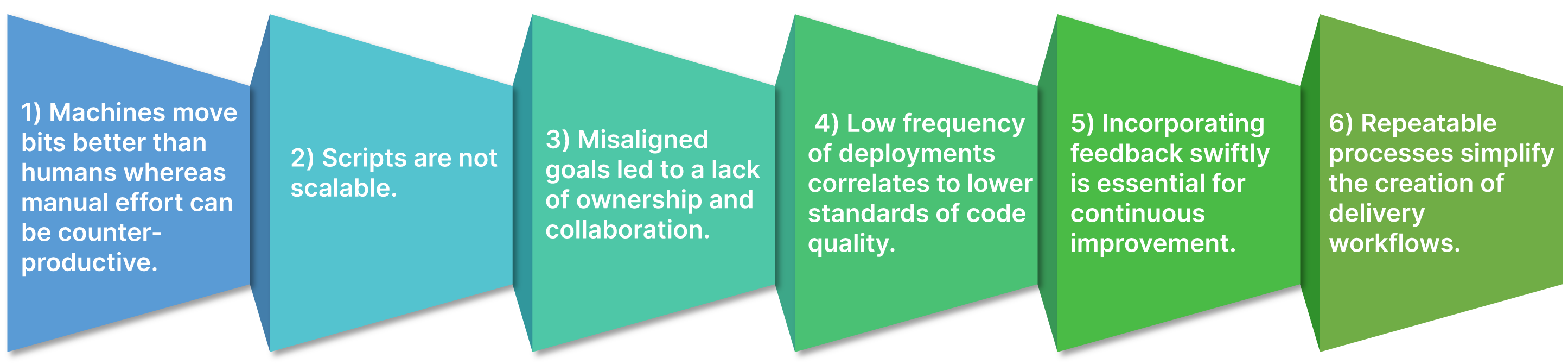

More than a decade ago, engineers and developers partially improved the software delivery process by introducing Continuous Integration. CI was a stepping stone toward immutable infrastructure: developers could push code changes multiple times a day, and each push triggered an automated build cycle that caught integration issues before the change reached production. Once the code was built, it was ready to be deployed onto standalone monolithic servers and mainframes. In this traditional environment, however, deployment was mostly manual and sometimes relied on scripts. With the rise of modern platforms like Kubernetes, the traditional physical machine-based platforms became largely redundant, and script-dependent delivery processes failed to scale. Distributed topologies made delivery even more complex, and teams ran into the series of challenges highlighted below:

1. Machines move bits better than humans; manual effort can be counterproductive.

A manual process is highly prone to errors, which in turn increase costs. As organizations scale, manual processes often fail to keep up with business expansion. For example, when a multinational company's globally distributed developers all push code through a manual process, delivery becomes a bottleneck and may eventually break down.

2. Scripts are not scalable.

Previously, point automation with scripts improved efficiency only to a certain extent. When organizations moved from the monolithic architecture of mainframes and on-premises servers to a microservices production environment, the scripts could not cope with the transformation. The automated continuous delivery pipeline was conceived to bridge the gap that scripts left behind. It is designed so that code flows to its destination at the push of a button.

3. Misaligned goals led to a lack of ownership and collaboration.

Successfully deploying code into production is a goal for developers as much as for SREs. The entire DevOps team is therefore responsible when code fails in production. In reality, though, stakeholders often fail to take ownership of the software delivery process, and precious time is lost to blame games and firefighting.

4. Low frequency of deployments correlates to lower standards of code quality.

Breaking a big project into smaller tasks is a reliable way of getting things done. The same holds for software delivery: infrequent updates create a backlog, and large batches of changes give many more things a chance to go wrong and compromise code quality. Shipping in bite-size increments makes changes easier to manage and quicker to troubleshoot.

5. Incorporating feedback swiftly is essential for continuous improvement.

Feedback from a product's end users highlights underlying issues and possible improvements. Incorporating this feedback soon after it is received can improve customer engagement and reduce the chance that users switch to competing applications. This cycle, when repeated, is called continuous improvement. It is therefore crucial to minimize the delay between receiving feedback and acting on it, because that delay affects customer satisfaction.

6. Repeatable processes simplify the creation of delivery workflows.

Releasing code must be simple and easy. Templatizing pipelines lets DevOps teams configure and set up pipelines quickly while still enforcing security and compliance policies. It also streamlines the management of hundreds of pipelines through a single management tool.
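As a rough illustration of templatized pipelines, the sketch below renders per-service pipelines from one shared template. The stage names, policy flags, and the `render_pipeline` helper are assumptions made for this example and do not correspond to any particular tool's format.

```python
from copy import deepcopy
from typing import Optional

# Hypothetical shared template: stage names and policy flags are illustrative.
BASE_TEMPLATE = {
    "stages": ["build", "security-scan", "deploy-staging", "approval", "deploy-production"],
    "policies": {"require_security_scan": True, "require_approval": True},
}


def render_pipeline(service: str, overrides: Optional[dict] = None) -> dict:
    """Build a pipeline definition for one service from the shared template."""
    pipeline = deepcopy(BASE_TEMPLATE)  # never mutate the shared template
    pipeline["name"] = f"{service}-delivery"
    for key, value in (overrides or {}).items():
        if key == "policies":
            # Compliance policies are owned by the template, not by each team.
            raise ValueError("policies are fixed by the template")
        pipeline[key] = value
    return pipeline
```

Each team gets a pipeline with a single call such as `render_pipeline("checkout")`, while the security and compliance policies stay defined in one place.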

What value does Continuous Delivery offer?

High-performing teams that use a CD framework can achieve markedly better results than their counterparts who do not. Organizations looking to gain an edge over their competition should adopt the best practices of continuous delivery.

1. Reduced risk associated with releases

Continuous delivery frameworks enforce a templated process that is repeatable and automated. This reduces the risk associated with software deployments and makes releasing a straightforward process. Developers gain the confidence to push updates at any time, on demand. Combined with advanced deployment strategies, continuous delivery greatly reduces the chance of encountering errors in production.

2. Faster GTM

Code not delivered is money burnt. Traditionally, software delivery could take anywhere from weeks to months. For instance, updating an operating system a decade ago was a mammoth task compared with today. Testing, provisioning, and deploying updates automatically and repeatedly, every day, helps teams get products into customers' hands in the shortest time possible.

3. Better quality software

Automating the delivery process lets engineers and developers focus on what they do best: writing code. Developers can then run rigorous security, functional, and performance checks to catch errors right at the beginning of the deployment pipeline. This saves time and results in higher-quality code updates and, ultimately, better products.

4. Reduced costs

Traditionally, delivering updates was an arduous task. Investing in an automated deployment pipeline can substantially reduce the cost of delivering updates throughout the lifetime of the product. Teams achieve this largely by eliminating the fixed overhead costs associated with each release.

5. Customer-centric products

Equipping development teams with a CD framework helps them work in small batches. A shorter deployment cycle means developers get customer feedback as soon as they release an update. Moreover, by actively engaging with users, teams can observe the outcomes of their updates first-hand. This incremental update process, with its built-in feedback loop, helps teams address customer concerns right out of the gate.

6. More confident teams

Continuous delivery frameworks simplify the software delivery process. Deployment strategies such as canary releases work without taking a heavy toll on infrastructure, which boosts developers’ confidence to experiment and innovate more. Still, some SREs struggle with a slow pipeline even after implementing continuous delivery, and this can happen for several reasons. Read the blog on tackling slow pipelines to learn more.
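To make the canary idea concrete, here is a minimal sketch of the promote-or-rollback decision, assuming the only signal is the error rate of the canary compared with the current baseline. The `canary_verdict` function and its `tolerance` parameter are hypothetical; real canary analysis usually compares many metrics with statistical tests.

```python
def canary_verdict(
    baseline_errors: int,
    baseline_requests: int,
    canary_errors: int,
    canary_requests: int,
    tolerance: float = 0.01,
) -> str:
    """Decide whether a canary should be promoted or rolled back.

    The canary is promoted when its error rate is no worse than the
    baseline error rate plus an allowed tolerance.
    """
    if baseline_requests <= 0 or canary_requests <= 0:
        raise ValueError("both versions need traffic before they can be compared")
    baseline_rate = baseline_errors / baseline_requests
    canary_rate = canary_errors / canary_requests
    return "promote" if canary_rate <= baseline_rate + tolerance else "rollback"
```

Because only a small share of traffic reaches the canary, a "rollback" verdict affects few users, which is part of what makes frequent experimentation less risky.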


How do I get started?

Before starting a continuous delivery transformation, you might expect the journey to be smooth. In reality, there will be multiple challenges along the way. Assessing where you are on that journey sheds light on your organization’s current position and may reveal areas that need improvement.

If you would like to learn how to assess your CD journey and check whether you are on the right track, read our blog “Is your DevOps Journey heading the right way?”

Throughout the CD journey, we rely heavily on metrics that reveal critical outcomes and show whether software and services are being delivered quickly and reliably. These metrics are essential for making a convincing case for transforming a continuous delivery platform, because they demonstrate the improvement in business outcomes both qualitatively and quantitatively. You can forecast the ROI of your continuous delivery transformation by analyzing the key metrics defined by DORA (DevOps Research and Assessment).
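The four key DORA metrics are deployment frequency, lead time for changes, change failure rate, and time to restore service. The sketch below shows one way they could be computed from deployment records. The `Deployment` record, its field names, and the `dora_metrics` function are assumptions for illustration; a real setup would pull this data from the CD platform and incident tooling.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def _hours(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 3600


def dora_metrics(deployments: List[Deployment], period_days: int) -> dict:
    """Compute the four key metrics over a reporting period."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    failures = [d for d in deployments if d.failed]
    restore_times = [
        _hours(d.deployed_at, d.restored_at) for d in failures if d.restored_at
    ]
    return {
        "deployment_frequency_per_day": len(deployments) / period_days,
        "median_lead_time_hours": median(
            _hours(d.committed_at, d.deployed_at) for d in deployments
        ),
        "change_failure_rate": len(failures) / len(deployments),
        # None when no failed deployment has a recorded restore time.
        "median_time_to_restore_hours": median(restore_times) if restore_times else None,
    }
```

Tracking these numbers before and after a pipeline change gives a quantitative baseline for the kind of ROI forecast described above.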

Which Continuous Delivery tool should I select and why?

Most tools on the market claim to deliver the outcomes expected from a continuous delivery implementation. As discussed earlier, continuous delivery is a framework with multiple moving parts. Over the years, most organizations have tried to reach the desired continuous delivery state by stitching together disparate tools. But chaining multiple tools into one delivery pipeline can introduce security risks and does not scale on demand. It is therefore important that businesses choose a single integrated platform that meets all their requirements.

The top things that businesses must look out for while selecting a Continuous Delivery platform are:

- Easy setup and configuration

- Visibility and collaboration

- Software integration and extensibility

- Build and deployment environment support

- Security and compliance

- Workflow flexibility

- Performance, uptime, and scalability

OpsMx Enterprise for Spinnaker not only checks all these boxes but also provides an extra layer of intelligence called Autopilot. Autopilot uses machine learning algorithms to reduce deployment risk and make deployments more seamless. If you are just getting started with your CD pipeline journey, there is no need to fret: our expert engineers at OpsMx can help you implement it in less than 15 minutes. OpsMx also offers 25+ free sample pipelines so that any organization can start deploying into its target environment from day one of operation.
