Most organizations today deploy software changes to production rapidly. To take only calculated risks, though, they want to roll out changes and features with progressive delivery mechanisms such as canary releases: expose the new software to a small portion of production traffic, analyze whether it behaves as expected, and then gradually release it to all traffic.

As more software is deployed to Kubernetes, Argo Rollouts has become a widely popular tool for implementing canary releases quickly. Although runtime traffic splitting can be done with a simple ingress, this blog uses the Istio service mesh. Istio is a widely known service mesh and is being adopted rapidly by enterprises.

Note: Argo Rollouts also works well with load balancers and almost all NGINX ingress controllers.

This blog shows how to implement an automated canary across two application subsets (v1 and v2) using Argo Rollouts, Istio, and OpsMx ISD for Argo.

Before we begin

Broadly, implementing a canary strategy comes down to the following three steps:

- Configure the network: Configure the Istio gateway to control incoming traffic and direct it to the application's stable and canary versions.

- Configure the deployment agent: Configure Argo Rollouts and set rules for releasing a new version to production with a canary deployment.

- Configure the analytics software: Apply AI/ML to automate verification of the canary before each incremental increase in traffic.

Configure Istio to split traffic among application subsets

Canary deployment is the practice of exposing a small subset of users or traffic to a new application version (the canary) while most traffic continues to go to the older version. As the new version's performance and quality are validated and verified, it receives progressively more traffic until it eventually replaces the old version.
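The progression described above (shift a little traffic, verify, shift more, or roll back) can be sketched as a small loop. This is a minimal, hypothetical model: `progressive_canary`, the weight schedule, and the `is_healthy` callback are illustrative names, not part of Istio, Argo Rollouts, or ISD.

```python
from typing import Callable, List, Tuple


def progressive_canary(
    schedule: List[int],
    is_healthy: Callable[[int], bool],
) -> Tuple[str, List[int]]:
    """Walk a canary through increasing traffic weights (hypothetical model).

    schedule:   canary traffic percentages below 100, tried in order.
    is_healthy: verifies the canary while it receives the given weight.
    Returns the outcome and every canary weight that was applied.
    """
    applied: List[int] = []
    for weight in schedule:
        applied.append(weight)       # shift this share of traffic to the canary
        if not is_healthy(weight):   # verification failed at this weight
            applied.append(0)        # roll back: all traffic to the old version
            return "rolled back", applied
    applied.append(100)              # every check passed: the canary replaces the old version
    return "promoted", applied


# Usage: a canary that starts misbehaving once it receives 50% of the traffic.
print(progressive_canary([10, 25, 50], lambda w: w < 50))
# → ('rolled back', [10, 25, 50, 0])
print(progressive_canary([10, 25, 50], lambda w: True))
# → ('promoted', [10, 25, 50, 100])
```

In a real setup the network layer applies each weight, the deployment agent drives the loop, and the analytics software plays the role of `is_healthy`.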

Istio provides sophisticated traffic splitting for canary releases, as shown in the images below:

Runtime traffic splitting is achieved with the Istio Gateway and VirtualService resources (CRDs provided by Istio). Sample YAML for the Istio ingress gateway and virtual service looks like the following. Note that in this case the VirtualService rule splits incoming traffic 90/10: 90% goes to the baseline version of sample-application and 10% goes to the new version (the canary).

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: canary-gateway
spec:
  selector:
    istio: ingressgateway # using Istio IngressGateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*"

Sample VirtualService YAML specifying the traffic-splitting rules (Argo Rollouts updates these weights at runtime because it integrates natively with Istio):

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: rollout-vsvc
  namespace: ar-ns
spec:
  gateways:
  - canary-gateway
  hosts:
  - sample-application
  http:
  - name: primary
    route:
    - destination:
        host: sample-application
        subset: canary
      weight: 10
    - destination:
        host: sample-application
        subset: baseline
      weight: 90
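To make the 90/10 weights above concrete, the following toy simulation picks a subset for each request with probability proportional to its weight. It is only an illustration of weighted routing under that assumption; `simulate_split` is a hypothetical helper and does not reproduce how the Envoy proxies inside Istio actually balance load.

```python
import random
from collections import Counter
from typing import Dict


def simulate_split(weights: Dict[str, int], requests: int, seed: int = 0) -> Counter:
    """Pick a subset for each request with probability proportional to its weight.

    A toy model of weighted routing; it does not reproduce Envoy's internals.
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same counts
    subsets = list(weights)
    picks = rng.choices(subsets, weights=[weights[s] for s in subsets], k=requests)
    return Counter(picks)


# Weights copied from the rollout-vsvc VirtualService above.
counts = simulate_split({"canary": 10, "baseline": 90}, requests=10_000)
for subset in ("canary", "baseline"):
    print(subset, counts[subset] / 10_000)
```

Each printed fraction should land close to its weight divided by 100; with only a few requests, the observed split can deviate noticeably from 90/10.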

An Istio DestinationRule defines the subsets (different versions) of the same application that the VirtualService routes traffic to.

apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: rollout-ds
  namespace: ar-ns
spec:
  host: sample-application # interpreted as sample-application.ar-ns.svc.cluster.local
  subsets:
  - name: baseline
    labels:
      version: baseline
  - name: canary
    labels:
      version: canary
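A DestinationRule subset selects the pods whose labels include every label listed on that subset. The sketch below models that matching rule with plain dictionaries; the pod label sets are hypothetical, and it ignores the fact that Argo Rollouts also injects its own `rollouts-pod-template-hash` label into these subsets during a rollout.

```python
from typing import Dict, List

Labels = Dict[str, str]


def pods_in_subset(subset_labels: Labels, pods: List[Labels]) -> List[Labels]:
    """Return the pods whose labels contain every key/value pair of the subset."""
    return [
        pod for pod in pods
        if all(pod.get(key) == value for key, value in subset_labels.items())
    ]


# Hypothetical label sets for pods of sample-application.
pods = [
    {"app": "sa-argo-rollout", "version": "baseline"},
    {"app": "sa-argo-rollout", "version": "baseline"},
    {"app": "sa-argo-rollout", "version": "canary"},
]

print(len(pods_in_subset({"version": "baseline"}, pods)))  # → 2
print(len(pods_in_subset({"version": "canary"}, pods)))    # → 1
```

The takeaway is that a subset only has endpoints if some pods actually carry all of its labels; a subset whose labels match no pod receives traffic it cannot serve.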

Istio can split traffic, but an agent must update the traffic percentages based on analysis results. Argo Rollouts plays the role of that agent.

Let us now look at the Argo Rollouts configuration.

Configure Argo Rollouts to deploy the canary and define analysis rules

Argo Rollouts is a Kubernetes controller that offers a set of CRDs for implementing canary deployments. It integrates with the ingress controller or service mesh (in our case, Istio) to split traffic at runtime and gradually shift it to the new version. Argo provides a workload resource called Rollout (read the specification) for deploying applications into Kubernetes. Note: a Rollout is an extension of the Kubernetes Deployment/ReplicaSet resource.

Argo Rollouts can query and interpret metrics from various providers to verify key KPIs and drive automated promotion or rollback during an update. For this, it provides another resource, AnalysisTemplate, which defines how to perform canary analysis.
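How a metric result turns into promotion or rollback can be illustrated with a simplified model loosely based on the `successCondition` and `failureLimit` fields of an AnalysisTemplate metric. This is a sketch, not the controller's logic: real analysis runs also handle intervals, measurement counts, errors, and inconclusive results, all omitted here, and `evaluate_metric` plus the error-rate data are hypothetical.

```python
from typing import Callable, List


def evaluate_metric(
    measurements: List[float],
    success: Callable[[float], bool],
    failure_limit: int = 0,
) -> str:
    """Simplified model of judging one analysis metric.

    Each measurement is checked against `success`; the metric fails as soon as
    the number of failed measurements exceeds `failure_limit`.
    """
    failures = 0
    for value in measurements:
        if not success(value):
            failures += 1
            if failures > failure_limit:
                return "Failed"    # would lead to an abort and rollback
    return "Successful"            # would let the rollout continue


# Hypothetical error-rate measurements with a "below 5%" success condition.
error_rates = [0.01, 0.02, 0.07, 0.03]
print(evaluate_metric(error_rates, lambda r: r < 0.05))                   # → Failed
print(evaluate_metric(error_rates, lambda r: r < 0.05, failure_limit=1))  # → Successful
```

Raising the failure limit tolerates occasional noisy measurements at the cost of reacting more slowly to a genuinely bad canary.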

You need a Service to expose the sample application. (Note: we use the Argo Rollouts Rollout resource instead of a Kubernetes Deployment to implement the canary.) As you can see, the Service selects pods labeled app: sa-argo-rollout, which are created by the Rollout resource of the same name.

apiVersion: v1
kind: Service
metadata:
  name: sample-application
  labels:
    app: sa-argo-rollout
spec:
  selector:
    app: sa-argo-rollout
  ports:
  - port: 3100
    targetPort: 8088
  type: ClusterIP

Below is an example Rollout resource called sa-argo-rollout. It creates 2 pods of issuegen (v1.2.5), representing the stable (or baseline) version.

The Rollout updates the virtual service to send 20% of the traffic to the canary, pauses for 31 seconds, and then runs an analysis to check whether the canary is performing well. If it is, the Rollout moves on: a further setWeight step would update the virtual service to send another 20% (40% in total) of the traffic to the canary, while with the steps shown here the canary is promoted to all traffic once the analysis passes.

In the canary strategy specification, we declare that each time there is a new canary deployment, the sa-argo-rollout resource invokes isd-analysis-job, an Argo Rollouts AnalysisTemplate.

apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: sa-argo-rollout
spec:
  replicas: 2
  revisionHistoryLimit: 2
  selector:
    matchLabels:
      app: sa-argo-rollout
  template:
    metadata:
      labels:
        app: sa-argo-rollout
    spec:
      containers:
      - name: rollouts-baseline
        image: docker.io/opsmxdev/issuegen:1.2.5
        imagePullPolicy: Always
        ports:
        - containerPort: 8088
  strategy:
    canary:
      trafficRouting:
        istio:
          virtualService:
            name: rollout-vsvc # required
            routes:
            - primary # optional if there is a single route in VirtualService, required otherwise
          destinationRule:
            name: rollout-ds # required
            canarySubsetName: canary # required
            stableSubsetName: baseline # required
      steps:
      - setWeight: 20
      - pause: { duration: 31s }
      - experiment:
          analyses:
          - name: isd-analysis-job
            requiredForCompletion: true
            templateName: isd-analysis-job
          templates:
          - name: baseline
            specRef: stable
          - name: canary
            specRef: canary
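The step list in the manifest above can be read as a sequence of canary weights. The sketch below walks that list as plain Python data to show the weights the VirtualService would see. It is a simplified model under stated assumptions: the analysis outcome is passed in as a boolean, the pause duration is not simulated, and `walk_steps` is a hypothetical helper rather than anything Argo Rollouts exposes. It reflects the documented behavior that a rollout is fully promoted after its last step and that an abort sends traffic back to the stable subset.

```python
from typing import Any, Dict, List, Tuple

# The canary steps from the sa-argo-rollout manifest above, as Python data.
STEPS: List[Dict[str, Any]] = [
    {"setWeight": 20},
    {"pause": {"duration": "31s"}},
    {"experiment": {"analyses": [{"name": "isd-analysis-job"}]}},
]


def walk_steps(steps: List[Dict[str, Any]], analysis_passes: bool) -> Tuple[str, List[int]]:
    """Return the outcome and the canary weights applied to the VirtualService."""
    weights: List[int] = []
    for step in steps:
        if "setWeight" in step:
            weights.append(step["setWeight"])  # controller rewrites the route weights
        elif "pause" in step:
            continue                           # split held for the pause duration
        elif "experiment" in step and not analysis_passes:
            weights.append(0)                  # abort: traffic back to the stable subset
            return "aborted", weights
    weights.append(100)                        # all steps done: the canary is promoted
    return "promoted", weights


print(walk_steps(STEPS, analysis_passes=True))   # → ('promoted', [20, 100])
print(walk_steps(STEPS, analysis_passes=False))  # → ('aborted', [20, 0])
```

With only one setWeight step, a passing analysis takes the canary from 20% straight to full promotion; inserting more setWeight and analysis steps gives finer-grained increments.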

All the logic for comparing the canary's metrics and logs against the baseline version is defined in the AnalysisTemplate.

Configure the AnalysisTemplate in Argo Rollouts for canary analysis

The Argo Rollouts AnalysisTemplate resource looks something like the one below. In it, you specify the rules, conditions, and analysis logic for increasing traffic. In this case, we have invoked opsmx11/verifyjob:v5 to verify the canary using AI/ML in ISD for Argo. The idea is to increase traffic to the canary release only if the analysis score is above 60 (the marginal threshold; the pass threshold is 80). Otherwise, the analysis fails the canary deployment and the newly created canary pods are deleted.

apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: isd-analysis-job
spec:
  metrics:
  - name: isd-analysis-job
    provider:
      opsmx:
        gateUrl: https://ds312.isd-dev.opsmx.net/
        application: sample-application
        user: admin
        lifetimeHours: "0.05"
        threshold:
          pass: 80
          marginal: 60
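The pass and marginal thresholds in the template above split the 0 to 100 score into three bands. The sketch below shows one way to read them; it assumes both boundaries are inclusive, and `classify_score` is a hypothetical helper. How ISD and Argo Rollouts act on a "marginal" result is not spelled out in this post, which only states that traffic increases when the score is above 60.

```python
def classify_score(score: float, pass_threshold: float = 80, marginal_threshold: float = 60) -> str:
    """Bucket a 0-100 analysis score using pass/marginal thresholds (assumed inclusive)."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= pass_threshold:
        return "pass"
    if score >= marginal_threshold:
        return "marginal"
    return "fail"


for s in (92, 70, 45):
    print(s, classify_score(s))
# → 92 pass
#   70 marginal
#   45 fail
```

Keeping the thresholds as parameters mirrors the template, where they can be tuned per application without changing the analysis itself.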

Automated canary verification with OpsMx ISD for Argo

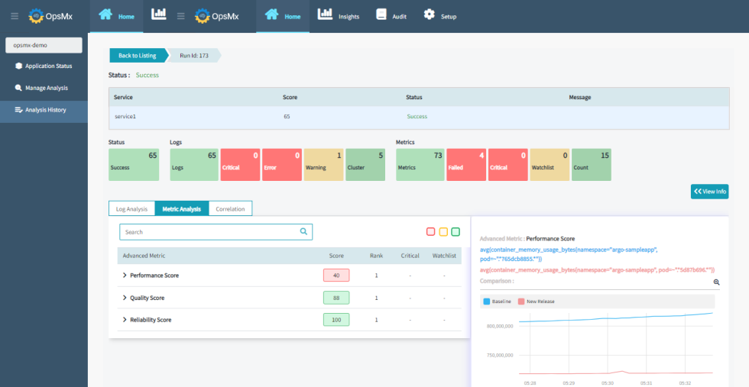

OpsMx ISD for Argo provides the intelligence for CI/CD processes to deliver software safely and securely. It uses AI/ML to analyze logs, metrics, and other data sources to identify the risk of every change, automatically determining the confidence that an update can be promoted to the next stage of a canary deployment. Check out the data sources OpsMx ISD integrates with out of the box. ISD for Argo determines individual risk scores for quality, performance, reliability, and security. Refer to the image below.

After analyzing a release, ISD for Argo sends back the results, and Argo Rollouts decides whether to abort or progress the release. The best part is not only that ISD for Argo can fetch logs and metrics from many tools, such as Splunk, Sumo Logic, AppDynamics, Prometheus, New Relic, Graphite, Elasticsearch, and Dynatrace, but that it can also analyze logs and metrics together and produce a score from 0 to 100 that highlights the risk of a release in production. If the new application shows any problems, such as latency or SQL connection issues, it can be rolled back quickly.

Watch the complete video on how ISD for Argo performs automated verification when apps are deployed into Kubernetes GitOps-style with Argo CD and Argo Rollouts.

Video on automated canary analysis using OpsMx ISD for Argo CD and Argo Rollouts

Conclusion

Canary deployment is an essential practice in software delivery for almost all DevOps teams across industries, helping them release products quickly and with minimal risk. However, enterprise applications can have multiple dependencies between microservices, various network load balancers, cloud infrastructure, and security requirements. So a canary deployment strategy should be implemented with careful consideration and according to best practices.

Talk to one of our CI/CD experts to implement canary deployment strategies using Argo CD or Spinnaker.

About OpsMx

Founded with the vision of “delivering software without human intervention,” OpsMx enables customers to transform and automate their software delivery processes. OpsMx builds on open-source Spinnaker and Argo with services and software that help DevOps teams SHIP BETTER SOFTWARE FASTER.
