Quality of Software Releases Is Critical to Today's Business Survival

“Speed thrills but kills.” Companies in almost every sector incur significant losses when they rush to deliver applications. Poor software quality leads to lost customers, production failures, and financial losses, as the following examples show:

- Companies lose about 50M USD in sales within 2 hours because of software failure, according to Bloomberg.

- The aviation industry booked a loss of 400B USD due to application complexities in 2019, according to Test Magazine.

- According to Global Banking and Finance Magazine, 80,000 customers switched banks, which contributed to a loss of 433B USD; the reason cited was the banks' urgency to hit the market with new applications.

OpsMx Autopilot is a Continuous Verification (CV) platform that can verify a software release at every stage of software delivery. It is also an integral part of OpsMx Enterprise for Spinnaker and works with all CI and CD tools. Autopilot applies machine learning and context built across the SDLC to improve the quality of delivery. Enterprises can use the verification platform to perform risk assessment at every CI/CD stage: Build, Test, Deploy, and Prod. Shifting verification to the left of CI/CD can radically bring down application complexities, cost significantly less, and positively impact deployments.

This blog covers continuous verification of applications in the Test stage only. We think the topic will be of keen interest to readers who aim to keep their software complexities under control. What will further interest them is the idea of instilling intelligence into software testing and swiftly delivering risk-free applications into the hands of end customers.

1. Challenge: Complexity of Testing Software Increases Exponentially with Speed

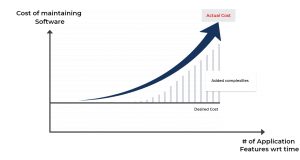

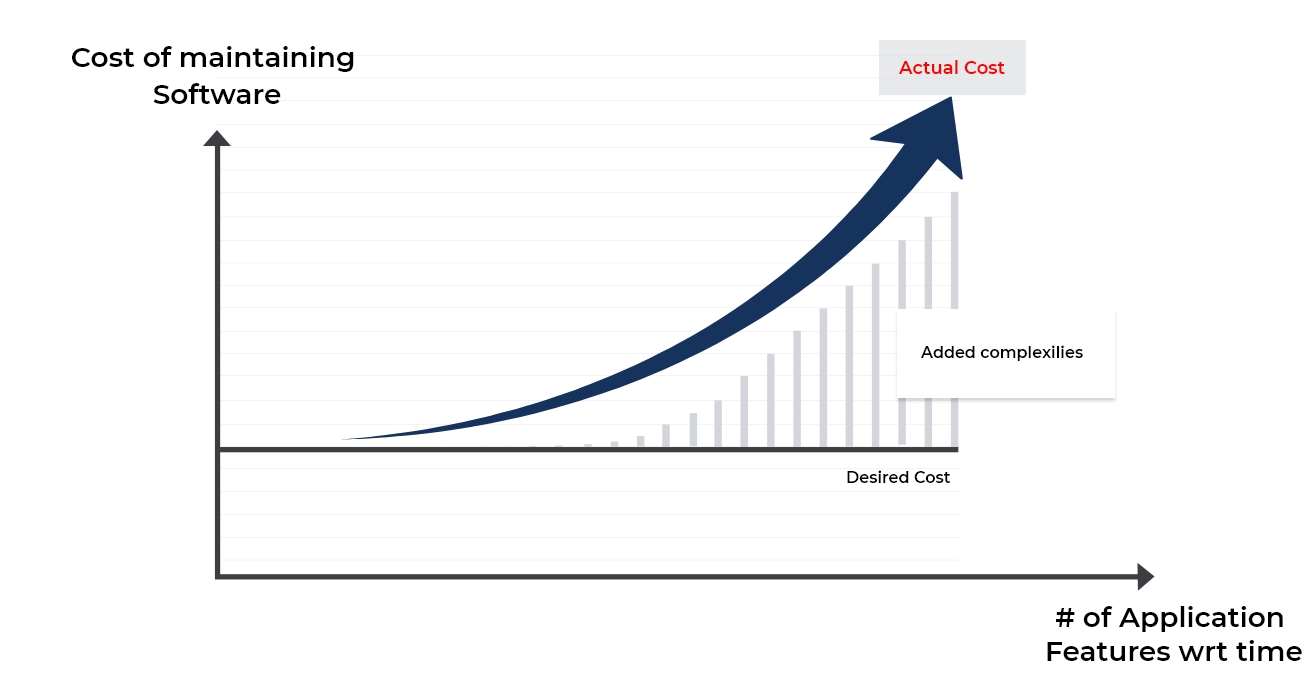

Enterprises are increasingly using test automation to reduce the time needed to test software before releasing it into production, as Arnon Axelrod explains in his book Complete Guide to Test Automation. Since the advent of CI/CD, the ability to release features faster has increased exponentially. As delivery speed has risen, the time allocated for software testing has steadily declined. With so little time available, it is challenging for teams to release new features without breaking the application, and diagnosing failed test cases becomes a costly and time-consuming process.

In practice, the situation gets worse with time. Without proper testing, software complexity increases in proportion to the number of features (or user stories) created. Consequently, as complexity rises, the cost of maintaining the software rises exponentially with time. [The cost of maintaining the software is directly proportional to the time taken to test the whole software release plus the time taken to resolve and retest bugs.] The accompanying graph shows how added complexity increases cost exponentially, making the software difficult to maintain over time.

1.1 Why Is Complexity Increasing?

The following sources of complexity grow over time as new features are introduced:

- Input:

  - Test scenarios, which test the functionality of an application to ensure no accidental complexities are introduced.

  - Test cases, which determine the viability and performance of each feature in an application.

- Output:

  - Accordingly, the volume and velocity of test logs rise. These logs record the testing process, test files, and output flags such as alerts, warnings, and exceptions, each with a timestamp.

A test log would look something like the below:
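As an illustration, the sketch that follows assumes a simple, hypothetical log layout (timestamp, level, test name, message). Real test frameworks each use their own formats, and the sample lines are invented for the example. The code parses each line and counts the output flags per severity level, which is the kind of raw signal that grows with every new feature.

```python
import re
from collections import Counter
from datetime import datetime

# Hypothetical log layout, assumed for illustration only:
#   <ISO timestamp> <LEVEL> [<test name>] <message>
LINE_RE = re.compile(
    r"^(?P<ts>\S+)\s+(?P<level>INFO|WARNING|ERROR|EXCEPTION)\s+"
    r"\[(?P<test>[^\]]+)\]\s+(?P<msg>.*)$"
)

# Invented sample lines; not output from any real tool.
SAMPLE_LOG = """\
2021-03-01T10:15:02 INFO [test_login] Starting test
2021-03-01T10:15:03 WARNING [test_login] Response slower than expected
2021-03-01T10:15:04 ERROR [test_checkout] Assertion failed: cart total mismatch
2021-03-01T10:15:05 EXCEPTION [test_checkout] TimeoutError while calling payment stub
"""


def parse_line(line):
    """Return a dict for a well-formed line, or None if it does not match."""
    match = LINE_RE.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["ts"] = datetime.fromisoformat(record["ts"])
    return record


def count_levels(log_text):
    """Count how many entries of each level appear in the log text."""
    records = (parse_line(line) for line in log_text.splitlines())
    return Counter(r["level"] for r in records if r is not None)


counts = count_levels(SAMPLE_LOG)
print(dict(counts))
```

Even in this tiny sample, two of the four entries need a human to look at them; at hundreds of test cases per release, that manual review is where the time goes.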

1.2 Economic Impact of Complexity in Testing

- Employee Productivity:

  - Testers spend hours per day investigating hundreds of accumulated test results to verify business value and identify regressions and defects. The burnout from repetitive, non-core activities drains the productivity and efficiency they need for their core activities.

- Time to Market:

  - If feedback does not reach developers in time, they face a significant context switch away from new work they have already started. Bug fixes can take a lot of time, and release dates are likely to be missed.

- Organizational Investments:

  - Investments in test automation are not worthwhile if the time spent investigating test results is greater than the time saved by running automated tests.
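The break-even condition in the last point can be made concrete with a little arithmetic. The sketch below is illustrative only: the function name and the hour figures are assumptions chosen for the example, not measurements from any customer.

```python
def automation_net_saving(manual_hours, automated_run_hours, investigation_hours):
    """Hours saved per release by automation, after paying for result triage.

    A positive value means automation pays off for that release; zero or a
    negative value means investigating results consumes the time that the
    automated runs saved.
    """
    time_saved_by_automation = manual_hours - automated_run_hours
    return time_saved_by_automation - investigation_hours


# Assumed figures for one release (hypothetical, in hours).
print(automation_net_saving(manual_hours=40, automated_run_hours=4, investigation_hours=10))  # 26
print(automation_net_saving(manual_hours=40, automated_run_hours=4, investigation_hours=45))  # -9
```

In the second case the team spends more time reading results than automation saved, which is the situation this section warns about.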

2. Solution: ML-based Continuous Verification to Reduce Cost & Complexity of Testing

To overcome such challenges, OpsMx has developed Autopilot, an AI/ML-powered Continuous Verification platform that helps testers rapidly find the errors in failed test cases, shortening the feedback loop and time to market. Key features of Autopilot are:

- Test Assessments: Autopilot continuously analyzes logs from test automation tools in real time, providing a consolidated status of test results and risks and saving teams the time and effort associated with manually monitoring test results. The image below shows how Autopilot highlights risk scores of test cases by applying machine learning to errors, critical failures, and similar signals.

- Automated Decisioning: Integrate Autopilot with your CI/CD deployment pipeline to automatically notify all stakeholders about failed release candidates that need attention and successful release candidates that are ready for deployment and subsequent release. Autopilot also optimizes test runs in real time: it parses logs emanating from test-automation tools during a test run and predicts the failure of test cases in the first few minutes of execution. Based on the prediction, it can automatically stop the test run, saving hours of testers’ time and productivity.

- Test Diagnosis and Triage: Autopilot’s AI and ML-based algorithms enable teams to quickly and easily identify the reasons for failing test cases. By processing hundreds of thousands of test logs, Autopilot filters out the noise, recognizing the critical errors (refer to the image below) in any scenario and uncovering false negatives missed by automated testing tools.

- Test Visibility and Insights: Autopilot provides an intuitive UI with a graphical representation of the successes and failures of previous releases, increasing visibility and collaboration among dev, ops, and infrastructure teams. The accompanying cluster graph represents critical errors and historical pass/fail data.
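To picture the assessment and early-stop ideas from the list above, here is a deliberately simple sketch. It is not Autopilot's algorithm or API, which this post describes as ML-based; it swaps in a fixed-weight, rule-based score as a stand-in. The weights, the threshold, and every name in it are assumptions made for illustration.

```python
# Assumed severity weights; a real system would learn these, not hard-code them.
LEVEL_WEIGHTS = {"INFO": 0.0, "WARNING": 1.0, "ERROR": 5.0, "EXCEPTION": 8.0}


def risk_score(levels):
    """Average weight of the log levels seen so far (0.0 for an empty list)."""
    if not levels:
        return 0.0
    return sum(LEVEL_WEIGHTS.get(level, 0.0) for level in levels) / len(levels)


def should_stop_early(level_stream, threshold=3.0, min_events=5):
    """Consume log levels in arrival order and report whether to stop the run.

    Returns (stop, events_seen). The run is stopped as soon as at least
    `min_events` entries have arrived and the running score exceeds
    `threshold`; otherwise the whole stream is consumed.
    """
    seen = []
    for level in level_stream:
        seen.append(level)
        if len(seen) >= min_events and risk_score(seen) > threshold:
            return True, len(seen)
    return False, len(seen)


# Hypothetical streams of log levels from two test runs.
healthy_run = ["INFO", "INFO", "WARNING", "INFO", "INFO", "INFO"]
failing_run = ["INFO", "ERROR", "EXCEPTION", "ERROR", "ERROR", "INFO", "INFO"]

print(should_stop_early(healthy_run))  # (False, 6)
print(should_stop_early(failing_run))  # (True, 5)
```

The `min_events` guard keeps a single early error from halting a run before there is enough evidence, which is the trade-off any early-stop rule has to make between saved time and false alarms.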

3. Customer Case Studies & Realized Benefits

The following are benefits realized by large enterprise customers.

- Enhance Tester Productivity and Decrease App Complexities:

  - Autopilot reduces the manual time needed to investigate failed test cases by 90%, freeing testers to build testing strategies, develop executable specifications, and perform more exploratory testing. Consequently, with intelligent verification processes in the testing phase, applications have almost no complexities introduced.

- Deliver Software Faster and Safer:

  - Autopilot shortens the feedback loop by investigating and diagnosing the root cause of failed test cases within a few minutes. Developers can fix bugs in their code and commit quickly, which reduces cycle time and enables continuous and planned deployments.

- Gain Confidence:

  - With automated real-time monitoring of test logs, Autopilot displays status and risks on intuitive dashboards that increase the confidence of product managers and DevOps engineers in their software releases.

Using Autopilot, enterprises can continuously verify their applications without degrading testers’ productivity and can ship applications faster with zero complexities. For customer case studies, please contact us. If you want to learn more or request a demo, please book a meeting with us. You can also get a free trial to explore the power of Autopilot test verification.

OpsMx is a leading provider of Continuous Delivery solutions that help enterprises safely deliver software at scale and without human intervention. We help engineering teams take the risk and manual effort out of releasing innovations at the speed of modern business. For additional information, contact us.
