Using MySQL for Spinnaker microservices: Orca and Clouddriver

Spinnaker uses Redis as the data store for all its microservices by default. In this blog, we demonstrate how to configure MySQL as the data store for the Orca and Clouddriver microservices in an OpenShift environment without any downtime. The same approach applies to any Kubernetes environment.

We have maintained a number of enterprise Spinnaker installations over time and have run into several scaling challenges with the original Redis-backed implementation in Clouddriver and Orca. For example, once a large number of applications and pipelines were deployed, we realized we needed a database built for persistence and indexing to get faster access to the data.

Setting up MySQL in Spinnaker

Running a MySQL pod on OpenShift has its own challenges, as it must run as a non-root user. We are going to configure two MySQL deployments, one for Clouddriver and one for Orca, each with its own service and ConfigMap.

The Clouddriver deployment won't have any persistent storage. Orca, however, is responsible for maintaining all the activity and history of pipeline executions, so its deployment will be assigned a persistent volume (PV). Clouddriver is switched over to MySQL outright, since we do not depend on any old data in it.

For Orca we use the Dual Execution Repository: all new executions go to MySQL, while historical executions are still fetched from Redis. We tested MySQL 5.7 with Orca and Clouddriver.

OpenShift Image: “docker pull registry.access.redhat.com/rhscl/mysql-57-rhel7”

Kubernetes Image: “docker pull mysql:5.7”

These are the steps we follow in this blog:

- Step 1: Configure the MySQL deployments, each with a service and ConfigMap, for Clouddriver and Orca (a PVC is configured only for Orca, for data persistence).

- Step 2: Configure the database and users for Clouddriver and Orca respectively.

- Step 3: Update clouddriver-local.yml and orca-local.yml with the MySQL settings and do a “hal deploy apply” with the service names.

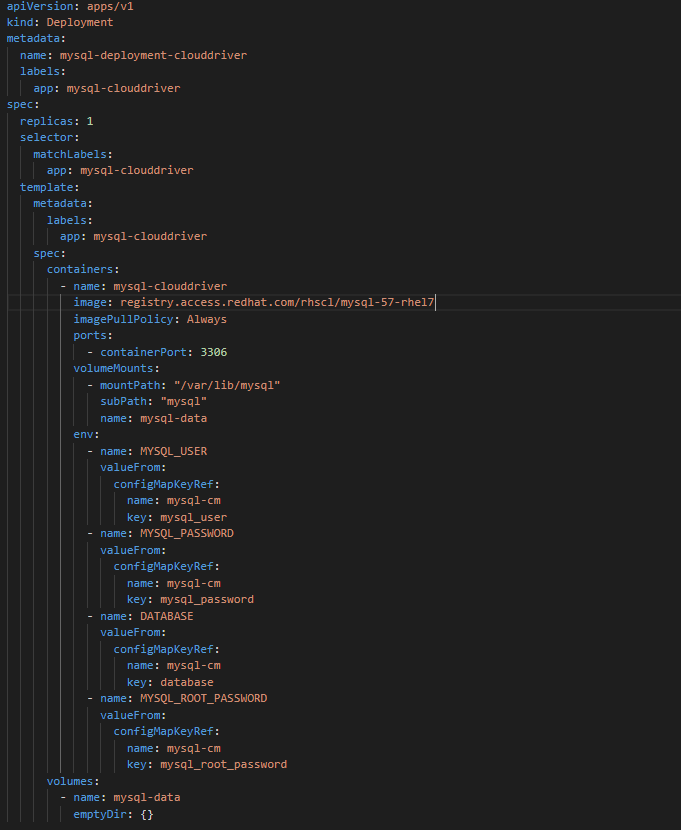

Configuring MySQL deployment for Spinnaker Clouddriver

This is what the MySQL deployment for Clouddriver looks like. We don't use a PVC for it, because we are not interested in persisting Clouddriver's data:


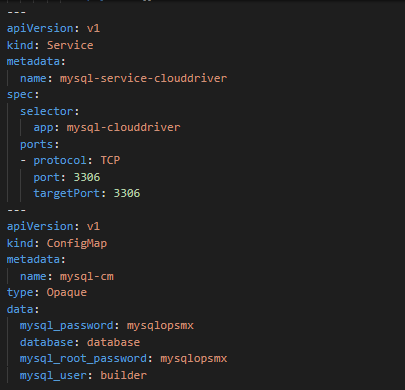
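For readers who cannot see the screenshot, here is a minimal sketch of what such a deployment could look like with the upstream mysql:5.7 image. The resource name mysql-clouddriver, the spinnaker namespace, and the Secret mysql-clouddriver-secret are hypothetical placeholders, not values taken from our environment. With the Red Hat image, the data directory and environment variables differ, so treat this as a starting point only.

```yaml
# Sketch only: names, namespace and Secret are illustrative assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-clouddriver          # hypothetical name
  namespace: spinnaker             # assumed Spinnaker namespace
  labels:
    app: mysql-clouddriver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql-clouddriver
  template:
    metadata:
      labels:
        app: mysql-clouddriver
    spec:
      containers:
        - name: mysql
          image: mysql:5.7
          ports:
            - containerPort: 3306
          env:
            # The upstream image requires a root password at first start.
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-clouddriver-secret   # hypothetical Secret
                  key: root-password
          volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
      volumes:
        # No PVC: the Clouddriver cache can be rebuilt, so an emptyDir is enough.
        - name: data
          emptyDir: {}
```

Because the volume is an emptyDir, the database starts empty whenever the pod is rescheduled, which matches the decision above not to persist Clouddriver data.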

The service and ConfigMap look like this:

MySQL service for Spinnaker Clouddriver

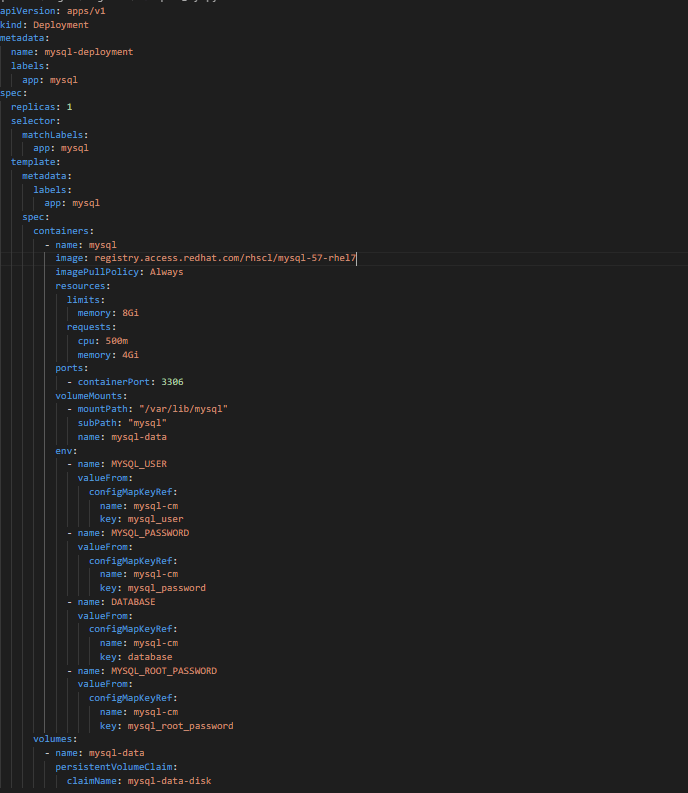
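As a rough illustration, a Service and ConfigMap for the Clouddriver database might be sketched as follows. The names are hypothetical, and the single MySQL setting shown is an assumption based on the READ-COMMITTED isolation level that the Spinnaker SQL setup guides call for; the actual ConfigMap contents in our environment are not reproduced here.

```yaml
# Sketch only: names and my.cnf contents are illustrative assumptions.
apiVersion: v1
kind: Service
metadata:
  name: mysql-clouddriver          # hypothetical; this becomes the DNS name used in the JDBC URL
  namespace: spinnaker
spec:
  selector:
    app: mysql-clouddriver         # must match the pod labels of the MySQL deployment
  ports:
    - name: mysql
      port: 3306
      targetPort: 3306
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql-clouddriver-config   # hypothetical name
  namespace: spinnaker
data:
  spinnaker.cnf: |
    [mysqld]
    transaction-isolation = READ-COMMITTED
```

With the upstream mysql:5.7 image, a ConfigMap like this would typically be mounted as a volume at /etc/mysql/conf.d so that the server picks up the extra settings at startup.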

Configuring MySQL deployment for Spinnaker Orca

We configure the MySQL deployment for Orca in the same way as for Clouddriver, but with a PVC to store the database so that data survives pod restarts. We also back up the MySQL database to make sure we don't incur any data loss.


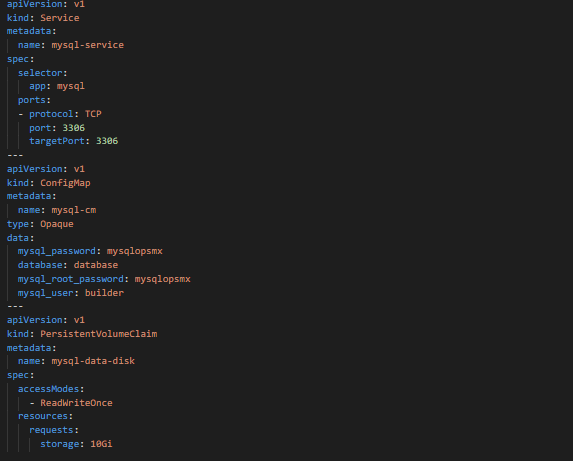

The service, PVC, and the configMap:

MySQL service, PVC, and the configMap for Spinnaker Orca

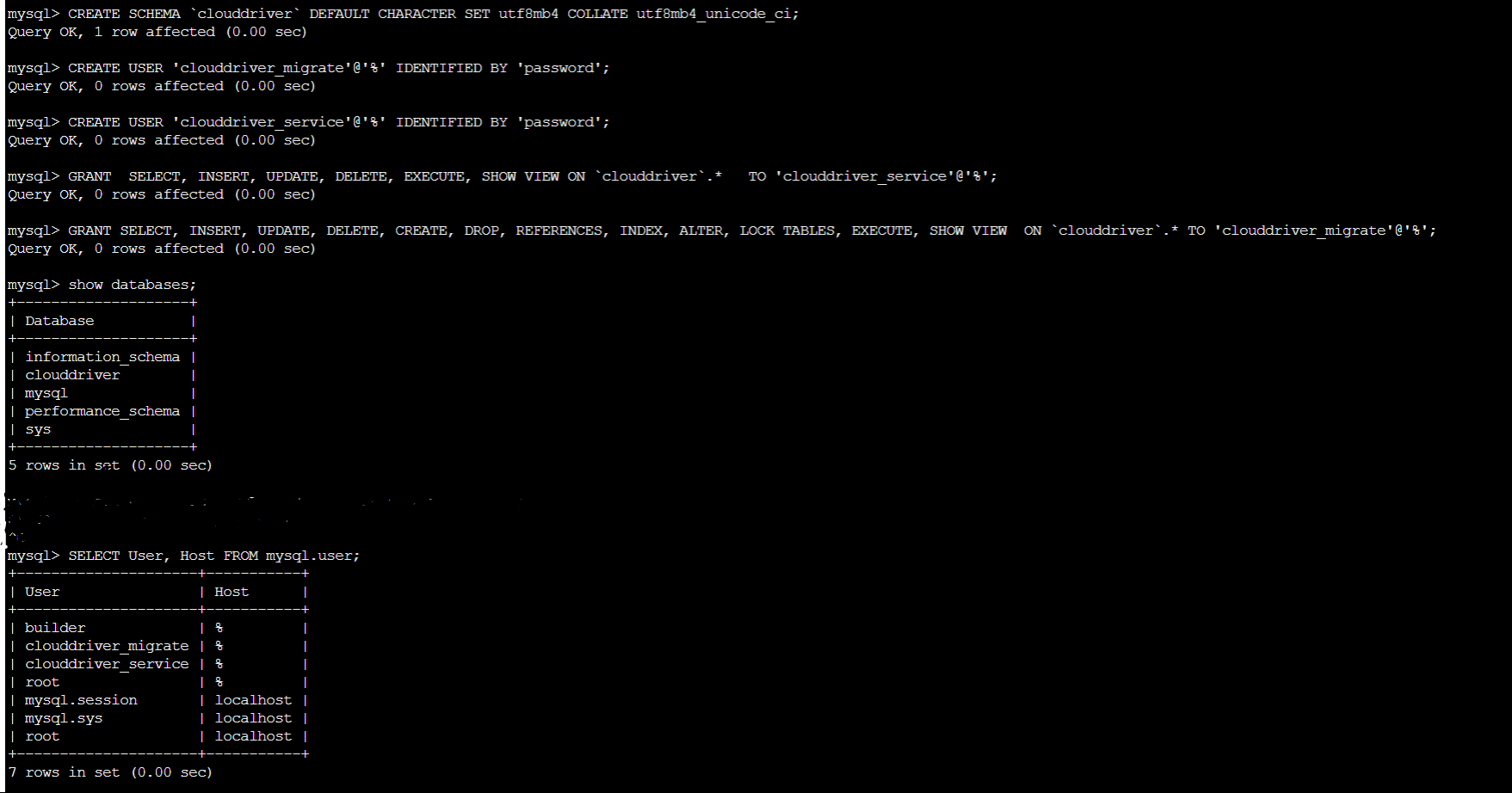
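The following sketch shows how the PVC could be tied into the Orca MySQL deployment. The claim name mysql-orca-data, the 10Gi size, and the Secret name are hypothetical placeholders; size the volume according to your own execution history.

```yaml
# Sketch only: names, storage size and Secret are illustrative assumptions.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-orca-data            # hypothetical claim name
  namespace: spinnaker
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi                # placeholder size
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-orca                 # hypothetical name
  namespace: spinnaker
spec:
  replicas: 1
  strategy:
    type: Recreate                 # avoid two pods competing for the same ReadWriteOnce volume
  selector:
    matchLabels:
      app: mysql-orca
  template:
    metadata:
      labels:
        app: mysql-orca
    spec:
      containers:
        - name: mysql
          image: mysql:5.7
          ports:
            - containerPort: 3306
          env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysql-orca-secret          # hypothetical Secret
                  key: root-password
          volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
      volumes:
        # Execution history must survive restarts, so the data directory lives on the PVC.
        - name: data
          persistentVolumeClaim:
            claimName: mysql-orca-data
```

The only structural difference from the Clouddriver sketch is the volume source: a persistentVolumeClaim instead of an emptyDir.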

Configure the MySQL Database and Users for Spinnaker Clouddriver

Once the pod is up and running, log in to MySQL as root, since the users have to be created by root:

# mysql -u root -p$MYSQL_PASSWORD -h $HOSTNAME $MYSQL_DATABASE

Once you are at the prompt, the first step is to create a database called “clouddriver”, and then create two users, clouddriver_service and clouddriver_migrate, which are granted permission to access it.

mysql> CREATE SCHEMA `clouddriver` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;

mysql> CREATE USER 'clouddriver_service'@'%' IDENTIFIED BY 'password';

mysql> CREATE USER 'clouddriver_migrate'@'%' IDENTIFIED BY 'password';

mysql> GRANT SELECT, INSERT, UPDATE, DELETE, CREATE, EXECUTE, SHOW VIEW ON `clouddriver`.* TO 'clouddriver_service'@'%';

mysql> GRANT SELECT, INSERT, UPDATE, DELETE, CREATE, DROP, REFERENCES, INDEX, ALTER, LOCK TABLES, EXECUTE, SHOW VIEW ON `clouddriver`.* TO 'clouddriver_migrate'@'%';

You can see that the users clouddriver_service and clouddriver_migrate have been created with their passwords, and that the “clouddriver” database has been created as well.

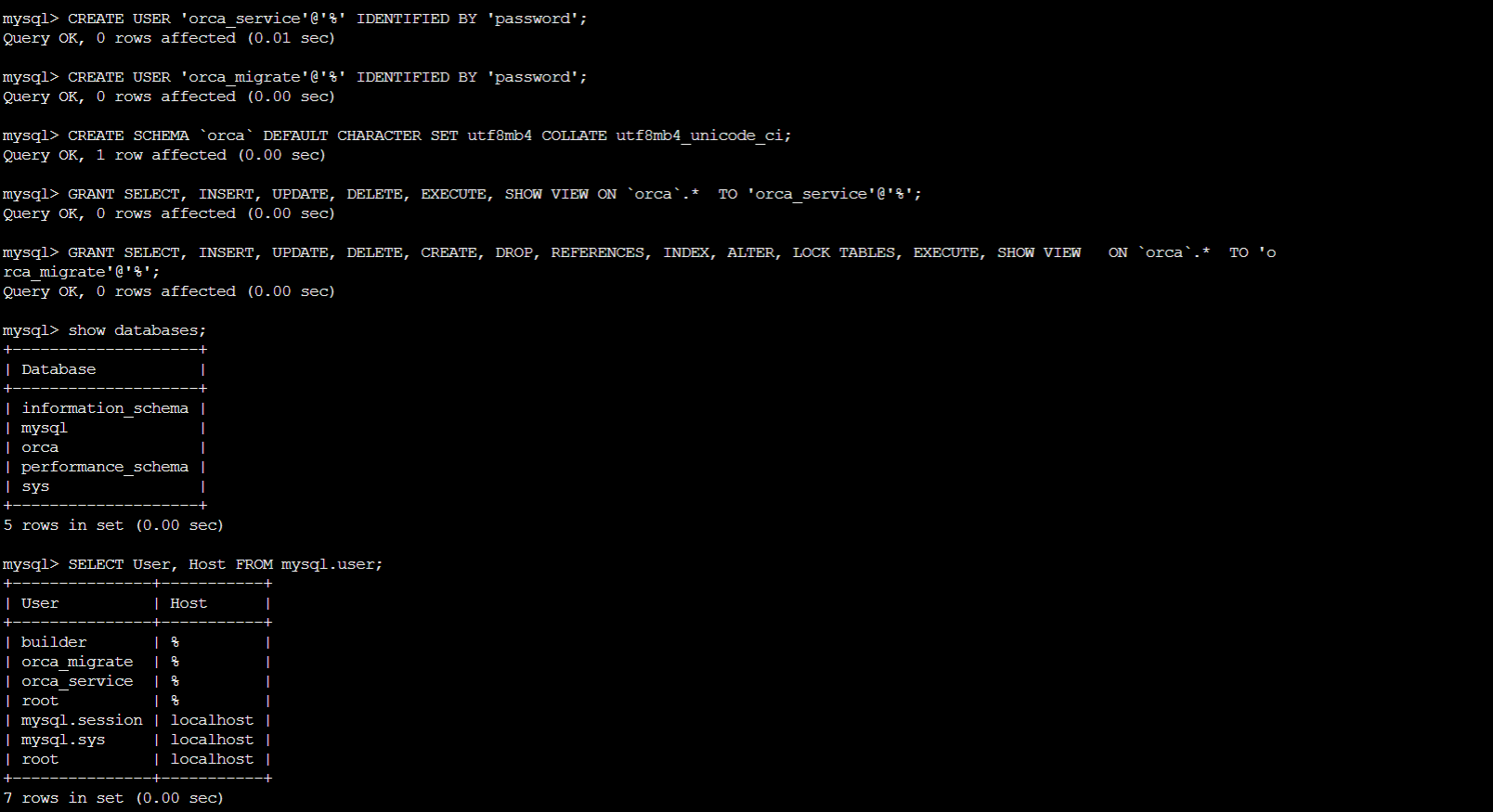

Configure the MySQL Database and Users for Spinnaker Orca

mysql> CREATE SCHEMA `orca` DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;

mysql> CREATE USER 'orca_service'@'%' IDENTIFIED BY 'password';

mysql> CREATE USER 'orca_migrate'@'%' IDENTIFIED BY 'password';

mysql> GRANT SELECT, INSERT, UPDATE, DELETE, EXECUTE, SHOW VIEW ON `orca`.* TO 'orca_service'@'%';

mysql> GRANT SELECT, INSERT, UPDATE, DELETE, CREATE, DROP, REFERENCES, INDEX, ALTER, LOCK TABLES, EXECUTE, SHOW VIEW ON `orca`.* TO 'orca_migrate'@'%';

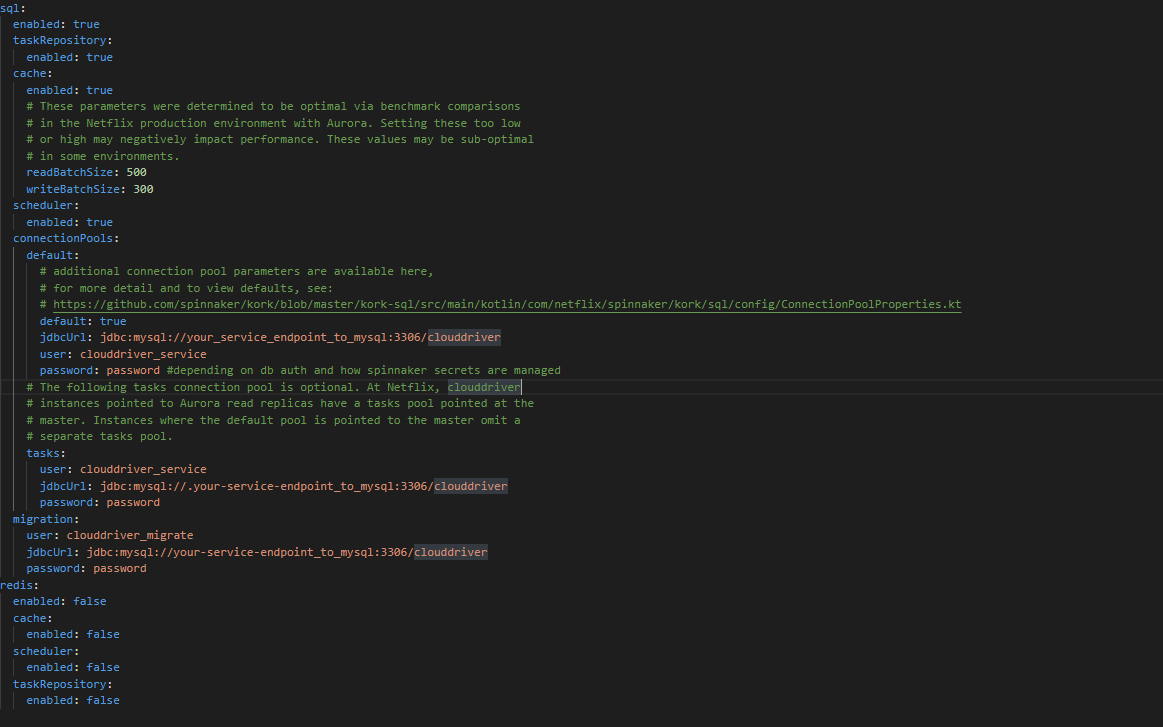

Configuring the clouddriver-local.yml to point to MySQL from Redis

Once the MySQL database and users are configured, we need to edit clouddriver-local.yml to point to MySQL and switch over from Redis. Since we were running Clouddriver instances in HA mode, we made the change in ~/.hal/default/profiles/clouddriver-local.yml.
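A minimal sketch of such a profile is shown below. The keys follow the SQL settings described in the Spinnaker Clouddriver documentation as we understand them, and they may differ between releases. The host mysql-clouddriver is a hypothetical service name, and the passwords are the placeholders used in the CREATE USER statements.

```yaml
# ~/.hal/default/profiles/clouddriver-local.yml
# Sketch only: host name and passwords are placeholders.
sql:
  enabled: true
  taskRepository:
    enabled: true
  cache:
    enabled: true
    readBatchSize: 500
    writeBatchSize: 300
  scheduler:
    enabled: true
  connectionPools:
    default:
      default: true
      jdbcUrl: jdbc:mysql://mysql-clouddriver:3306/clouddriver   # hypothetical service name
      user: clouddriver_service
      password: password
  migration:
    jdbcUrl: jdbc:mysql://mysql-clouddriver:3306/clouddriver
    user: clouddriver_migrate
    password: password

# Turn off the Redis-backed implementations so Clouddriver uses only MySQL.
redis:
  enabled: false
  cache:
    enabled: false
  scheduler:
    enabled: false
  taskRepository:
    enabled: false
```

The service user handles normal reads and writes, while the migrate user is used only for schema migrations, which is why it was granted the broader set of privileges.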

Configuring the orca-local.yml to apply Dual Execution Repository

Once the MySQL database and users are configured, we need to edit orca-local.yml to point to both MySQL and Redis, since we are using the Dual Execution Repository. All newer executions go to MySQL, and all historical executions can still be retrieved from Redis. As with Clouddriver, we made the change for Orca in ~/.hal/default/profiles/orca-local.yml.

Once clouddriver-local.yml and orca-local.yml are configured, do a “hal deploy apply” with the service names of Clouddriver and Orca.

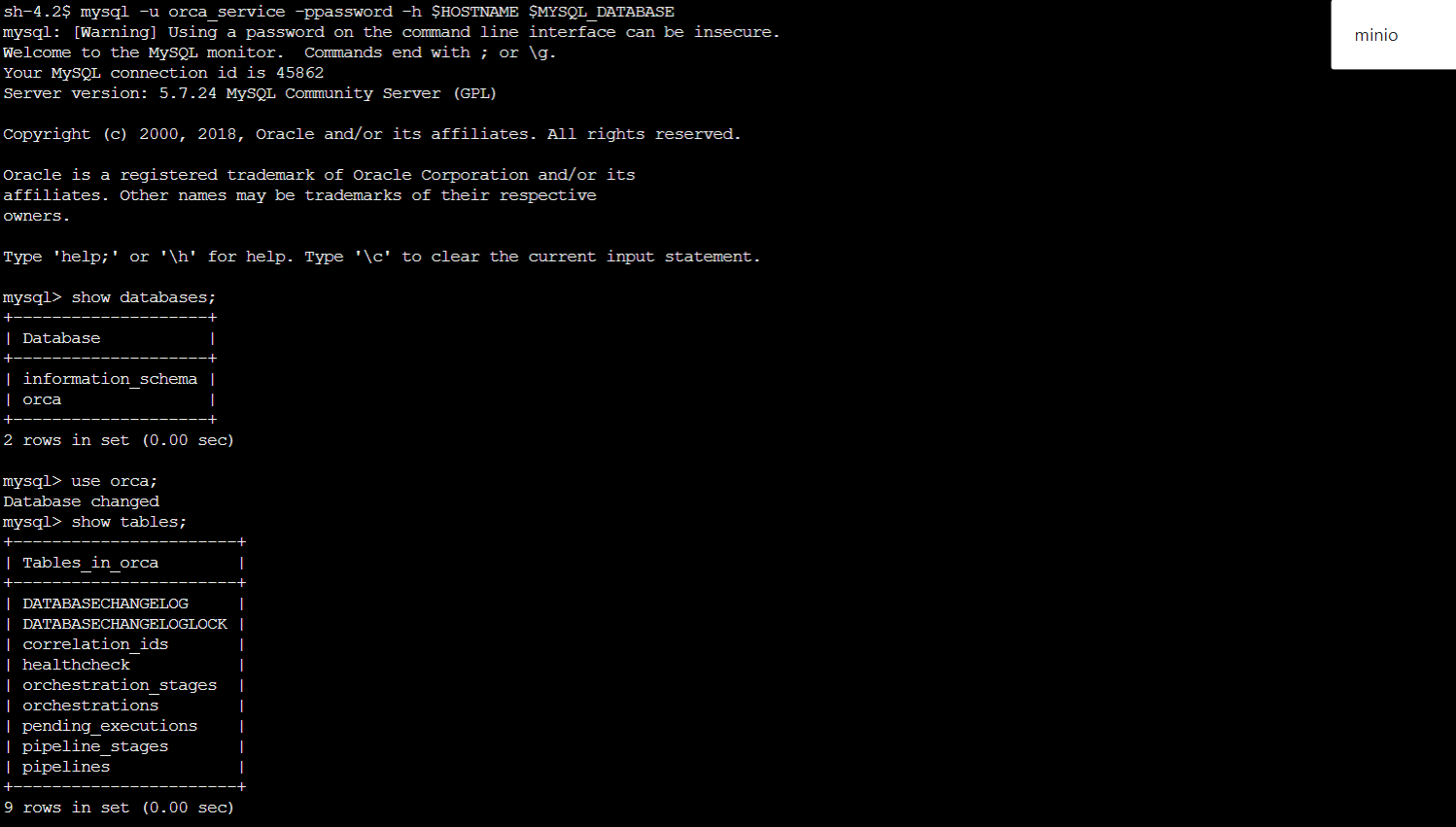
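For illustration, an orca-local.yml using the Dual Execution Repository might look roughly like the sketch below. The host mysql-orca is a hypothetical service name and the passwords are placeholders. The dual-repository keys shown here (primaryName and previousName) are our understanding of recent Orca releases; older releases used differently named keys, so check the documentation for your Spinnaker version before relying on them.

```yaml
# ~/.hal/default/profiles/orca-local.yml
# Sketch only: host name, passwords and dual-repository key names are assumptions.
sql:
  enabled: true
  connectionPool:
    jdbcUrl: jdbc:mysql://mysql-orca:3306/orca   # hypothetical service name
    user: orca_service
    password: password
    connectionTimeout: 5000
    maxLifetime: 30000
  migration:
    jdbcUrl: jdbc:mysql://mysql-orca:3306/orca
    user: orca_migrate
    password: password

executionRepository:
  dual:
    enabled: true
    primaryName: sqlExecutionRepository      # new executions are written to MySQL
    previousName: redisExecutionRepository   # historical executions are read from Redis
  sql:
    enabled: true
  redis:
    enabled: true
```

Redis stays enabled here on purpose: it is still the source for historical executions until they are no longer needed.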

Verifying that new pipelines are going to MySQL

Log in to the Orca MySQL pod and, from the mysql prompt, check the pipelines table in the orca database. In our case, it contained the 9 pipelines we had most recently triggered.

Conclusion

We have shown how to configure a MySQL backend for Spinnaker Orca and Clouddriver. As you can see, it is fairly simple.

If you want to know more about the OpsMx Enterprise Spinnaker or request a demonstration, please book a meeting with us.

OpsMx is a leading provider of Continuous Delivery solutions that help enterprises safely deliver software at scale and without any human intervention. We help engineering teams take the risk and manual effort out of releasing innovations at the speed of modern business. For additional information, contact us
