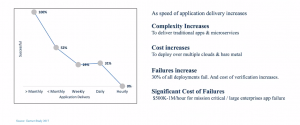

“Faster Releases Lead to Increasing Failures” – Gartner Study, 2017

According to a 2017 Gartner study, failures increased significantly as organizations tried to speed up the delivery of software applications. Failure rates rose when organizations moved from monthly releases to weekly, daily, or hourly ones. Failed deliveries are also expensive, and a failure at the production deployment stage costs much more than one caught at the testing stage.

Gartner Study, 2017 on Continuous Delivery studying Deployment Success Rates vs Frequency of Application Delivery (Speed)


Speed vs Failures

As the speed of application delivery increases:

- Complexity Increases – delivering both traditional apps and microservices

- Cost Increases – deploying across multiple clouds and bare metal

- Failures Increase – 30% of all deployments fail, and the cost of verification rises

- Significant Cost of Failures – $500K–$1M per hour when a mission-critical or large-enterprise application fails

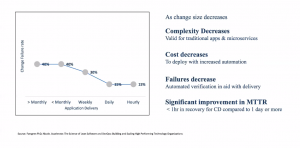

With the adoption of microservice-based architectures, cloud environments, and automated delivery platforms like Spinnaker, the way small changes are delivered continuously to target environments has improved significantly. Delivering small changes frequently, in short iterative cycles using Continuous Delivery (CD), has significantly improved success rates, lowered costs, and improved the mean time to recover (MTTR).

Gartner Study, 2017 on Continuous Delivery studying % Change in Success Rates vs Frequency of Application Delivery (Speed)

Smaller Deployments May Not Be Enough

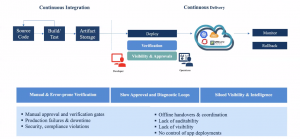

Let us look at current delivery mechanisms. They are generally organized into two phases: Continuous Integration (CI) and Continuous Delivery (CD).

- In the Continuous Integration (CI) phase, code is checked in, built, and tested (unit tests, static code analysis), and the resulting artifacts are passed on to CD.

- The Continuous Delivery (CD) phase includes deployment verification, dynamic code analysis, providing the visibility needed for approvals, and finally deployment to production using a deployment strategy.

At each of these stages, manual approval and verification gates decide whether to promote the artifacts to the next stage. Manual handoffs by different people at different times produce different results and inconsistencies.
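To see why codified gates give consistent results where manual handoffs do not, here is a minimal Python sketch of a pipeline in which each stage has an explicit, rule-based gate. The stage names, result keys, and the score threshold of 80 are hypothetical and chosen only for illustration. The sketch does not describe any particular CI/CD tool.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Artifact:
    name: str
    version: str
    # Results collected as the artifact moves through CI and CD.
    results: Dict[str, float] = field(default_factory=dict)


@dataclass
class Stage:
    name: str
    # A gate returns True to promote the artifact past this stage.
    gate: Callable[[Artifact], bool]


def run_pipeline(artifact: Artifact, stages: List[Stage]) -> List[str]:
    """Run the stages in order and stop at the first gate that fails.

    Returns the names of the stages the artifact passed.
    """
    passed: List[str] = []
    for stage in stages:
        if not stage.gate(artifact):
            break
        passed.append(stage.name)
    return passed


# Hypothetical CI gates (build, unit tests, static analysis) and one CD gate.
# A missing result defaults to a failing value, so absent data blocks promotion.
stages = [
    Stage("build", lambda a: a.results.get("build_ok", 0) == 1),
    Stage("unit-tests", lambda a: a.results.get("unit_test_failures", 1) == 0),
    Stage("static-analysis", lambda a: a.results.get("critical_findings", 1) == 0),
    Stage("deployment-verification", lambda a: a.results.get("verification_score", 0) >= 80),
]

art = Artifact("checkout-service", "1.4.2", {
    "build_ok": 1, "unit_test_failures": 0,
    "critical_findings": 0, "verification_score": 72,
})
print(run_pipeline(art, stages))  # ['build', 'unit-tests', 'static-analysis']
```

Because each gate is a fixed rule, the same inputs always lead to the same promotion decision, no matter who runs the pipeline or when.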

Hence, there is significant room for improvement by adopting smarter strategies for Continuous Delivery.

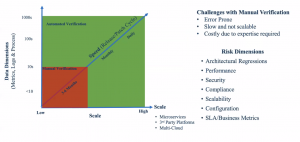

Challenges with Manual Verification

Verification is error-prone, slow, and costly

In our experience, the CI/CD process is affected by the following factors:

- Manual & Error-prone Verification: Manually verifying and approving releases at each stage of the continuous delivery pipeline (build, test, deploy, and production) is time-consuming and error-prone. Collecting logs and metrics from different data sources, running regression checks, estimating risk, and making decisions usually takes days. Similarly, security and compliance managers add to the waiting time while they perform the checks that ensure adherence to industry standards.

- Slow Approval and Diagnostic Loops: Resolving production failures and SLA-breaching downtime takes hours, usually because the right performance and quality metrics for new deployments are not available.

- Siloed Visibility & Intelligence: A lack of visibility and coordination, together with siloed working models, hurts the effectiveness of the CI/CD process. Furthermore, audit managers have to wait days for reports from project managers before they can analyze failed deployments or pipeline executions. Modern applications have microservice-based architectures with a large number of services, and the individuals auditing them may lack expertise in all of those services. Looking only at the one service being promoted does not give them full visibility into the functionality and performance of the whole application in production.

- Costly Expertise: Verification is costly because it requires specialized expertise that teams often lack.

Verification is error-prone, slow, and costly in CI/CD

Together, these factors make the CI/CD process error-prone, costly, and slow, and the resulting delays in decision making hold back application delivery.

Need for AI-driven Automated Verification

To counter these challenges, you need Automated Verification. In a multi-tier service environment, promoting a service is not only about the service itself: you also need to verify the logs and metrics it generates to confirm that everything is fine. Beyond that, you want to see the entire ecosystem of the product and check all the dependencies before deciding that the service can move forward.

First, you need to check whether the targeted SLA and business metrics have been met before deciding to promote the service.
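As a minimal sketch of this first check, the Python snippet below compares observed values against SLA and business targets and lists the ones that are missed. The metric names and thresholds are hypothetical examples, not values from any real SLA or product.

```python
from typing import Dict, List, Tuple

# Hypothetical SLA/business targets: metric name -> (comparison, threshold).
SLA_TARGETS: Dict[str, Tuple[str, float]] = {
    "p95_latency_ms": ("<=", 300.0),
    "error_rate_pct": ("<=", 1.0),
    "checkout_success_pct": (">=", 99.0),
}


def sla_violations(observed: Dict[str, float],
                   targets: Dict[str, Tuple[str, float]] = SLA_TARGETS) -> List[str]:
    """Return the metrics that miss their target.

    A metric with no observed value is treated as a violation.
    """
    violations: List[str] = []
    for name, (op, threshold) in targets.items():
        value = observed.get(name)
        if value is None:
            violations.append(name)
            continue
        if op == "<=":
            ok = value <= threshold
        elif op == ">=":
            ok = value >= threshold
        else:
            raise ValueError(f"unsupported comparison {op!r}")
        if not ok:
            violations.append(name)
    return violations


observed = {"p95_latency_ms": 280.0, "error_rate_pct": 1.7, "checkout_success_pct": 99.4}
print(sla_violations(observed))  # ['error_rate_pct']
```

An empty list means every target was met and the service can be considered for promotion.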

Second, you need to examine the system logs. Applications built on frameworks such as Spring Boot generate a huge volume of log records, and you need to check them for anything that might cause a problem.
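A first pass over such logs can be as simple as counting severity levels and Java exception names. The Python sketch below does this with regular expressions. It assumes log lines that contain a level keyword such as `ERROR` or `WARN`, as Spring Boot's default format does, and the sample lines are made up. This is plain pattern matching, not the NLP-based analysis described later.

```python
import re
from collections import Counter
from typing import Dict, Iterable

# Severity keywords as they typically appear in Spring Boot / Logback output.
LEVEL_RE = re.compile(r"\b(TRACE|DEBUG|INFO|WARN|ERROR|FATAL)\b")
# Fully qualified Java exception names such as java.lang.NullPointerException.
EXCEPTION_RE = re.compile(r"\b((?:[a-z]\w*\.)+[A-Z]\w*(?:Exception|Error))\b")


def summarize_logs(lines: Iterable[str]) -> Dict[str, Counter]:
    """Count the first severity level on each line and every exception name."""
    levels: Counter = Counter()
    exceptions: Counter = Counter()
    for line in lines:
        match = LEVEL_RE.search(line)
        if match:
            levels[match.group(1)] += 1
        for exc in EXCEPTION_RE.findall(line):
            exceptions[exc] += 1
    return {"levels": levels, "exceptions": exceptions}


sample = [
    "2024-05-01 10:00:01.123  INFO 4242 --- [main] c.e.OrderService : Started",
    "2024-05-01 10:00:02.456  WARN 4242 --- [io-1] c.e.OrderService : Slow response 1200ms",
    "2024-05-01 10:00:03.789 ERROR 4242 --- [io-2] c.e.OrderService : Request failed",
    "java.lang.NullPointerException: null",
]
summary = summarize_logs(sample)
print(summary["levels"]["ERROR"], dict(summary["exceptions"]))
```

Counts like these can then feed a gate, for example blocking promotion when a new version logs more errors or new exception types compared with the previous one.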

You need to measure and analyze all the Risk Dimensions associated with:

- Architectural regression

- Performance

- Security

- Compliance

- Scalability

- Configuration
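One simple way to reduce these dimensions to a single decision is a weighted average with a per-dimension veto. The Python sketch below assumes that each dimension has already been scored as a risk between 0 and 1. The weights, the 0.3 promotion threshold, and the 0.8 veto level are all hypothetical and do not describe how any specific product weighs risk.

```python
from typing import Dict

# Hypothetical weights for the six risk dimensions above (they sum to 1.0).
WEIGHTS: Dict[str, float] = {
    "architectural_regression": 0.20,
    "performance": 0.25,
    "security": 0.20,
    "compliance": 0.15,
    "scalability": 0.10,
    "configuration": 0.10,
}


def overall_risk(risks: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Weighted average of per-dimension risks in [0, 1].

    A dimension with no measurement counts as maximum risk (1.0).
    """
    total = sum(weights.values())
    return sum(w * risks.get(dim, 1.0) for dim, w in weights.items()) / total


def may_promote(risks: Dict[str, float], threshold: float = 0.3, veto: float = 0.8) -> bool:
    # A single very risky dimension blocks promotion regardless of the average.
    if any(r >= veto for r in risks.values()):
        return False
    return overall_risk(risks) <= threshold


risks = {
    "architectural_regression": 0.1, "performance": 0.2, "security": 0.05,
    "compliance": 0.0, "scalability": 0.3, "configuration": 0.1,
}
print(round(overall_risk(risks), 2), may_promote(risks))  # 0.12 True
```

The veto exists because a weighted average can hide a single serious problem, such as a critical security finding, behind good scores elsewhere.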

This is why you need Automated Verification.

Risk Dimensions vs. Scale of Application Delivery

OpsMx Autopilot: an AI-driven Continuous Verification platform

Autopilot is an AI/ML-powered Continuous Verification platform that verifies software updates across deployment stages, ensuring their safety and reliability in production.

The continuous verification platform is also an integral part of our OpsMx Enterprise for Spinnaker and works with all CI and CD tools. Autopilot applies machine learning and context built up over the SDLC to improve the quality of delivery. Enterprises can use the verification platform to perform risk assessment at every CI/CD stage. Let us see how Autopilot works.

In a typical delivery workflow, artifacts are built and then go through integration and load tests. Gates at this point decide whether to promote to Staging, based on the P1 issues found or the functionality involved. Staging also has gates, based on the environment and configuration, that check for functional or performance issues. You can then use deployment strategies such as Canary or Blue-Green for rolling updates and decide whether a service deployment should be rolled back or promoted to production. At each of these gates, you need to examine the digital exhaust (the logs and metrics data for all the services), which a continuous verification platform like Autopilot can do for you. Because verification is automated, the results are consistent and the decisions are auditable. The learning involved is also codified through machine learning (ML).
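To make the canary gate concrete, here is a minimal Python sketch that compares canary metrics with a baseline and maps the result to promote, manual review, or rollback. It assumes lower-is-better metrics (latency, errors, CPU), a hypothetical 10% tolerance, and hypothetical pass and marginal thresholds of 80 and 60, loosely modeled on the pass/marginal score thresholds in Spinnaker's canary analysis. It is an illustration, not Autopilot's scoring method.

```python
from statistics import mean
from typing import Dict, List


def canary_score(baseline: Dict[str, List[float]], canary: Dict[str, List[float]],
                 tolerance: float = 0.10) -> float:
    """Percentage of metrics whose canary mean stays within `tolerance`
    (relative) of the baseline mean. Assumes lower is better for every metric.
    A metric with no canary data counts as a failure."""
    passed = 0
    for name, base_values in baseline.items():
        can_values = canary.get(name)
        if not can_values:
            continue
        if mean(can_values) <= mean(base_values) * (1 + tolerance):
            passed += 1
    return 100.0 * passed / len(baseline)


def gate_decision(score: float, pass_at: float = 80.0, marginal_at: float = 60.0) -> str:
    if score >= pass_at:
        return "promote"
    if score >= marginal_at:
        return "manual-review"
    return "rollback"


baseline = {"latency_ms": [200, 210, 190], "errors_per_min": [2, 3, 1], "cpu_pct": [40, 42, 38]}
canary = {"latency_ms": [205, 215, 200], "errors_per_min": [6, 5, 7], "cpu_pct": [41, 43, 39]}
score = canary_score(baseline, canary)
print(round(score, 1), gate_decision(score))  # 66.7 manual-review
```

Here the canary's error rate triples while latency and CPU stay within tolerance, so two of three metrics pass and the deployment is held for review instead of being promoted automatically.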

Autopilot- AI-driven Continuous Verification platform

You can integrate Autopilot into any of your delivery pipelines, be it Spinnaker, Jenkins, or any other pipeline in use. You can integrate it as a REST service to trigger an analysis as you deploy, whether in build, testing, deployment, or production. Based on the confidence score retrieved via the API, you can assess release readiness and decide whether to progress the release.
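The pattern of "retrieve a score over REST, then gate on it" can be sketched in a few lines of Python using only the standard library. The endpoint URL, the `run_id` parameter, and the `status` and `score` fields are hypothetical placeholders, not Autopilot's actual API. Consult the product documentation for the real interface.

```python
import json
import urllib.request

# HYPOTHETICAL endpoint and response shape, for illustration only.
ANALYSIS_URL = "https://autopilot.example.com/api/analysis/{run_id}"


def fetch_analysis(run_id: str, timeout: float = 10.0) -> dict:
    """GET the analysis result for a pipeline run and decode the JSON body."""
    url = ANALYSIS_URL.format(run_id=run_id)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def release_ready(analysis: dict, min_score: float = 80.0) -> bool:
    """Gate on the (hypothetical) 'status' and 'score' fields of the payload."""
    return analysis.get("status") == "COMPLETED" and analysis.get("score", 0) >= min_score


print(release_ready({"status": "COMPLETED", "score": 86}))  # True
```

Keeping the network call and the decision in separate functions lets a pipeline step call `fetch_analysis` and then exit with a non-zero status when `release_ready` returns False. The decision logic can also be tested without a live service.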

Autopilot plugs into any CI/CD platform

AI/ML behind Continuous Verification

The model for automated verification is very similar to machine learning models. You observe the data coming out of your services, such as log files from multiple services, metrics data in terms of SLOs (service level objectives), or system metrics. Based on this data over a period of time, you can build a profile for each service. The model is then continuously updated as new services are deployed. When a new service is deployed, the model can be used to check for deviations from the norm or to classify log messages that should be reported as errors. Based on these observations, a score is generated that supports automated decisions. You also need a deeper classification of the errors to extract specific diagnostic information that reduces MTTR (mean time to repair).
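A very simple version of "build a profile, check for deviations, produce a score" is shown below. It keeps a running mean and standard deviation per metric using Welford's online algorithm, so the profile can be updated as each verified release arrives. It then scores a new deployment by the share of metrics that fall within k standard deviations of the profile. The choice of k = 3 and the sample data are assumptions. The source does not say that Autopilot uses this particular method.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class RunningProfile:
    """Running mean and variance of one metric (Welford's online algorithm)."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        # Sample standard deviation; zero until there are two observations.
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def deviation_score(profiles: Dict[str, RunningProfile],
                    observed: Dict[str, float], k: float = 3.0) -> float:
    """Percentage of observed metrics within k standard deviations of their profile.
    Every observed metric must already have a profile."""
    within = 0
    for name, value in observed.items():
        p = profiles[name]
        ok = value == p.mean if p.std == 0 else abs(value - p.mean) <= k * p.std
        within += ok
    return 100.0 * within / len(observed)


history = {
    "latency_ms": [200, 205, 195, 210, 190],
    "error_rate_pct": [0.5, 0.6, 0.4, 0.5, 0.5],
}
profiles = {name: RunningProfile() for name in history}
for name, values in history.items():
    for v in values:
        profiles[name].update(v)

new_release = {"latency_ms": 215, "error_rate_pct": 1.2}
print(deviation_score(profiles, new_release))  # 50.0
```

After a release has been verified as healthy, its observations can be folded back in with `update`, which is one way to keep the profile current as new versions are deployed.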

Continuous Verification- Intelligence-driven automation

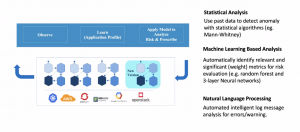

The Continuous Verification process consists of the following:

- Statistical Analysis: Uses past data to detect anomalies with statistical tests, e.g., the Mann-Whitney U test.

- Machine Learning (ML) based Analysis: Automatically identifies relevant and significant (weighted) metrics for risk evaluation, e.g., random forests and 3-layer neural networks.

- Natural Language Processing (NLP): Automated intelligent log message analysis for errors/warnings.
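For the statistical part, here is a self-contained Python sketch of the two-sided Mann-Whitney U test that uses the textbook normal approximation with a tie correction. Applied to baseline and canary samples of a metric, a small p-value signals that the canary's distribution has shifted. The sample latencies are made up. The source names the test but not the variant, so this is one standard formulation. In production code, `scipy.stats.mannwhitneyu` is a well-tested alternative.

```python
import math
from typing import Sequence, Tuple


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected,
    no continuity correction). Returns (U statistic for x, p-value)."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    combined = sorted([(v, 0) for v in x] + [(v, 1) for v in y], key=lambda t: t[0])
    ranks = [0.0] * len(combined)
    tie_term = 0.0
    i = 0
    while i < len(combined):
        # Find the run of tied values starting at i and give each the average rank.
        j = i
        while j + 1 < len(combined) and combined[j + 1][0] == combined[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    r1 = sum(r for r, (_, group) in zip(ranks, combined) if group == 0)
    u1 = r1 - n1 * (n1 + 1) / 2
    n = n1 + n2
    mu = n1 * n2 / 2
    var = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    sigma = math.sqrt(max(var, 0.0))
    if sigma == 0:
        return u1, 1.0  # every value tied: no evidence of a difference
    z = (u1 - mu) / sigma
    return u1, math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value


baseline_latency = [100, 102, 98, 101, 99, 103, 97, 100]
canary_latency = [110, 112, 108, 115, 111, 109, 113, 114]
u, p = mann_whitney_u(baseline_latency, canary_latency)
print(f"U={u:.1f}, p={p:.4f}")
```

Because the test compares ranks rather than raw values, it makes no normality assumption and is not thrown off by a few extreme latency spikes. This is a common reason rank-based tests are used for canary comparisons.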


An added benefit of Autopilot is that you can verify your deployments without writing any additional code, which gives you higher confidence when promoting software artifacts to production.

If you want to know more about Autopilot or request a demonstration, please book a meeting with us.

OpsMx is a leading provider of Continuous Delivery solutions that help enterprises safely deliver software at scale and without any human intervention. We help engineering teams take the risk and manual effort out of releasing innovations at the speed of modern business. For additional information, contact us.
